fast.ai Chapter 6: Bear Classifier

In Chapter 6, we learned to train an image recognition model for multi-label classification. In this notebook, I will apply those concepts to the bear classifier from Chapter 2.

I’ll place the prompt of the “Further Research” section here and then answer each part.

Retrain the bear classifier using multi-label classification. See if you can make it work effectively with images that don’t contain any bears, including showing that information in the web application. Try an image with two kinds of bears. Check whether the accuracy on the single-label dataset is impacted using multi-label classification.

Here’s a video walkthrough of this notebook:

HTML('<iframe width="560" height="315" src="https://www.youtube.com/embed/cJOtrHtzDSU?start=7193" title="YouTube video player" frameborder="0" allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture" allowfullscreen></iframe>')

Setup

from fastai.vision.all import *

import fastai

import pandas as pd

fastai.__version__

'2.3.0'

from google.colab import drive

drive.mount('/content/gdrive')

Mounted at /content/gdrive

I have three CSVs of Google Image URLs, one each for black, grizzly and teddy bears. The script below, taken from the book, creates a directory for each bear type inside the bears folder and then downloads that type’s images into it.

path = Path('/content/gdrive/MyDrive/fastai-course-v4/images/bears')

bear_types = ['black', 'grizzly', 'teddy']

if not path.exists():

    path.mkdir()

    for o in bear_types:

        dest = path/o

        dest.mkdir(exist_ok=True)

        download_images(dest, url_file=Path(f'/content/gdrive/MyDrive/fastai-course-v4/download_{o}.csv'))

# confirm that `get_image_files` retrieves all images

fns = get_image_files(path)

fns

(#535) [Path('/content/gdrive/MyDrive/fastai-course-v4/images/bears/black/black_00000002.jpg'),Path('/content/gdrive/MyDrive/fastai-course-v4/images/bears/black/black_00000000.jpg'),Path('/content/gdrive/MyDrive/fastai-course-v4/images/bears/black/black_00000001.jpg'),Path('/content/gdrive/MyDrive/fastai-course-v4/images/bears/black/black_00000003.jpg'),Path('/content/gdrive/MyDrive/fastai-course-v4/images/bears/black/black_00000004.jpg'),Path('/content/gdrive/MyDrive/fastai-course-v4/images/bears/black/black_00000005.jpg'),Path('/content/gdrive/MyDrive/fastai-course-v4/images/bears/black/black_00000007.jpg'),Path('/content/gdrive/MyDrive/fastai-course-v4/images/bears/black/black_00000008.jpg'),Path('/content/gdrive/MyDrive/fastai-course-v4/images/bears/black/black_00000010.jpg'),Path('/content/gdrive/MyDrive/fastai-course-v4/images/bears/black/black_00000009.jpg')...]

# verify all images

failed = verify_images(fns)

failed

(#0) []

Since I may need to move files around if they are incorrectly labeled, I’m going to prefix each filename with its bear type.

import os

for d in os.listdir(path):

    for f in os.listdir(path/d):

        os.rename(path/d/f, path/d/f'{d}_{f}')

fns = get_image_files(path)

fns

(#723) [Path('/content/gdrive/MyDrive/fastai-course-v4/images/bears/black/black_00000002.jpg'),Path('/content/gdrive/MyDrive/fastai-course-v4/images/bears/black/black_00000000.jpg'),Path('/content/gdrive/MyDrive/fastai-course-v4/images/bears/black/black_00000001.jpg'),Path('/content/gdrive/MyDrive/fastai-course-v4/images/bears/black/black_00000003.jpg'),Path('/content/gdrive/MyDrive/fastai-course-v4/images/bears/black/black_00000004.jpg'),Path('/content/gdrive/MyDrive/fastai-course-v4/images/bears/black/black_00000005.jpg'),Path('/content/gdrive/MyDrive/fastai-course-v4/images/bears/black/black_00000006.jpg'),Path('/content/gdrive/MyDrive/fastai-course-v4/images/bears/black/black_00000007.jpg'),Path('/content/gdrive/MyDrive/fastai-course-v4/images/bears/black/black_00000008.jpg'),Path('/content/gdrive/MyDrive/fastai-course-v4/images/bears/black/black_00000010.jpg')...]

Single-Label Classifier

I’ll train the single-label classifier as we did in Chapter 2.

# create DataBlock

bears = DataBlock(

    blocks=(ImageBlock, CategoryBlock),

    get_items=get_image_files,

    splitter=RandomSplitter(valid_pct=0.2, seed=42),

    get_y=parent_label,

    item_tfms=RandomResizedCrop(224, min_scale=0.5))

# create DataLoaders

dls = bears.dataloaders(path)

dls.valid.show_batch(max_n=4, nrows=1)

# verify train batch

dls.train.show_batch(max_n=4, nrows=1)

# first training

# use it to clean the data

learn = cnn_learner(dls, resnet18, metrics=error_rate)

learn.fine_tune(4)

| epoch | train_loss | valid_loss | error_rate | time |
|---|---|---|---|---|
| 0 | 1.367019 | 0.252684 | 0.080645 | 00:05 |

| epoch | train_loss | valid_loss | error_rate | time |
|---|---|---|---|---|
| 0 | 0.179421 | 0.175091 | 0.056452 | 00:04 |
| 1 | 0.155954 | 0.165824 | 0.048387 | 00:04 |
| 2 | 0.119193 | 0.173681 | 0.056452 | 00:04 |
| 3 | 0.098313 | 0.170383 | 0.048387 | 00:04 |

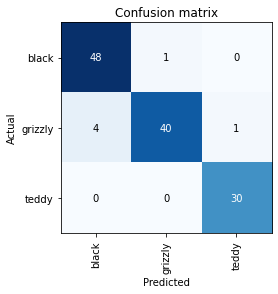

# view confusion matrix

interp = ClassificationInterpretation.from_learner(learn)

interp.plot_confusion_matrix()

Initial training: Clean the Dataset

# plot highest loss images

interp.plot_top_losses(5, nrows=1)

Some of these images are infographics containing text, illustrations and other non-photographic bear data. I’ll delete those using the cleaner.

from fastai.vision.widgets import *

# view highest loss images

# using ImageClassifierCleaner

cleaner = ImageClassifierCleaner(learn)

cleaner

# unlink images with "<Delete>" selected in the cleaner

for idx in cleaner.delete(): cleaner.fns[idx].unlink()

# move any images reclassified in the cleaner

for idx, cat in cleaner.change(): shutil.move(str(cleaner.fns[idx]), path/cat)

After a few rounds of quickly training the model and using the cleaner, I was able to remove or relabel a couple dozen images. I’ll use learn.lr_find() and retrain the model.

Second Training with Cleaned Dataset

path = Path('/content/gdrive/MyDrive/fastai-course-v4/images/bears')

# create DataLoaders

dls = bears.dataloaders(path)

#verify validation batch

dls.valid.show_batch(max_n=4, nrows=1)#verify training batch

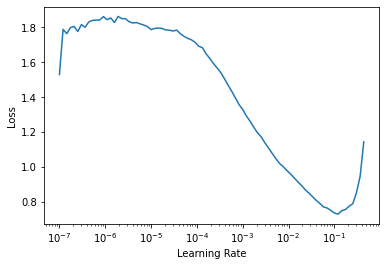

dls.train.show_batch(max_n=4, nrows=1)# find learning rate

learn = cnn_learner(dls, resnet18, metrics=error_rate)

learn.lr_find()SuggestedLRs(lr_min=0.012022644281387329, lr_steep=0.0005754399462603033)

# verify loss function

learn.loss_func

FlattenedLoss of CrossEntropyLoss()

# fit one cycle

lr = 1e-3

learn.fit_one_cycle(5, lr)

| epoch | train_loss | valid_loss | error_rate | time |
|---|---|---|---|---|
| 0 | 1.405979 | 0.418305 | 0.145161 | 00:04 |
| 1 | 0.803087 | 0.214286 | 0.056452 | 00:04 |
| 2 | 0.557531 | 0.169275 | 0.048387 | 00:04 |
| 3 | 0.408410 | 0.163632 | 0.056452 | 00:04 |
| 4 | 0.321682 | 0.164792 | 0.040323 | 00:04 |

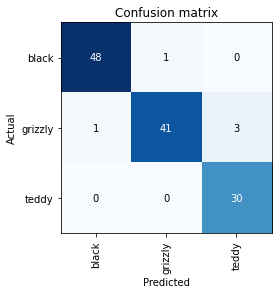

# view confusion matrix

interp = ClassificationInterpretation.from_learner(learn)

interp.plot_confusion_matrix()

# show results

learn.show_results()

# export the model

learn.export(fname=path/'single_label_bear_classifier.pkl')

Multi-Label Classifier

There are three major differences between training a multi-label classification model and a single-label model on this dataset. I present them in a table here:

| Classification Model Type | Dependent Variable | Loss Function | get_y function |
|---|---|---|---|
| Single-label | Decoded string | Cross Entropy (softmax) | parent_label |
| Multi-label | One-hot Encoded List | Binary Cross Entropy (sigmoid with threshold) | [parent_label] |
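To make the table concrete, here is a minimal pure-Python sketch of how the two setups treat the same raw model outputs. It does not use fastai or PyTorch; the helper names `softmax`, `sigmoid` and `one_hot`, the class order, and the example logits are my own illustrative assumptions, not values from the trained models.

```python
import math

vocab = ['black', 'grizzly', 'teddy']  # class order assumed for illustration

def softmax(logits):
    # single-label: activations compete and always sum to 1
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sigmoid(x):
    # multi-label: each class gets its own independent probability
    return 1 / (1 + math.exp(-x))

def one_hot(labels, vocab):
    # multi-label target: one 0/1 entry per class
    return [1 if c in labels else 0 for c in vocab]

logits = [-2.0, -1.5, -3.0]  # hypothetical outputs for an image with no bear

# single-label: always picks the largest activation, even if all are low
single_probs = softmax(logits)
single_pred = vocab[single_probs.index(max(single_probs))]

# multi-label: keeps only the classes that clear the threshold
multi_probs = [sigmoid(x) for x in logits]
multi_pred = [c for c, p in zip(vocab, multi_probs) if p > 0.5]

print(single_pred)                  # grizzly
print(multi_pred)                   # []
print(one_hot(['grizzly'], vocab))  # [0, 1, 0]
```

The single-label path has to name a bear because softmax probabilities sum to 1, while the multi-label path can return an empty list, which is what makes "no bears" representable.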

# create helper function

def get_y(o): return [parent_label(o)]# create DataBlock

bears = DataBlock(

blocks=(ImageBlock, MultiCategoryBlock),

get_items=get_image_files,

splitter=RandomSplitter(valid_pct=0.2, seed=42),

get_y=get_y,

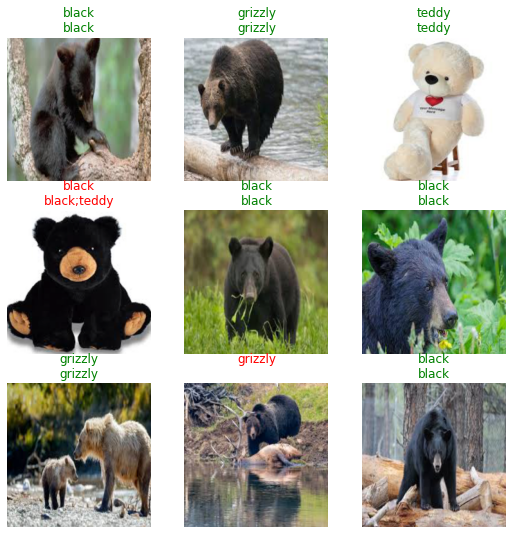

item_tfms=RandomResizedCrop(224, min_scale=0.5))# view validation batch

dls = bears.dataloaders(path)

dls.show_batch()

# find learning rate

learn = cnn_learner(dls, resnet18, metrics=partial(accuracy_multi,thresh=0.95), loss_func=BCEWithLogitsLossFlat(thresh=0.5))

learn.lr_find()

Downloading: "https://download.pytorch.org/models/resnet18-5c106cde.pth" to /root/.cache/torch/hub/checkpoints/resnet18-5c106cde.pth

# verify loss function

learn.loss_func

FlattenedLoss of BCEWithLogitsLoss()

lr = 2e-2

learn.fit_one_cycle(5, lr)

| epoch | train_loss | valid_loss | accuracy_multi | time |
|---|---|---|---|---|
| 0 | 0.478340 | 0.436599 | 0.937695 | 00:51 |
| 1 | 0.289231 | 0.642520 | 0.887850 | 00:03 |
| 2 | 0.203213 | 0.394335 | 0.897196 | 00:03 |
| 3 | 0.159622 | 0.155405 | 0.959502 | 00:02 |
| 4 | 0.132379 | 0.090879 | 0.965732 | 00:02 |
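The accuracy_multi column is computed with thresh=0.95, so it helps to see roughly what that metric measures. The sketch below is my own pure-Python approximation of the idea (apply a sigmoid to each activation, threshold it, compare with the 0/1 target, and average over every image and class). The function name `accuracy_multi_sketch` and the example logits are hypothetical, and this is not fastai's implementation.

```python
import math

def accuracy_multi_sketch(logits, targets, thresh=0.5):
    # fraction of (image, class) slots where the thresholded
    # sigmoid prediction matches the 0/1 target
    correct = total = 0
    for row, targ in zip(logits, targets):
        for x, t in zip(row, targ):
            pred = 1 / (1 + math.exp(-x)) > thresh
            correct += int(pred == bool(t))
            total += 1
    return correct / total

logits = [[4.0, -3.0, -5.0],   # confident "black"
          [-4.0, 2.0, -4.0]]   # less confident "grizzly" (sigmoid is about 0.88)
targets = [[1, 0, 0], [0, 1, 0]]

print(accuracy_multi_sketch(logits, targets, thresh=0.5))   # 1.0
print(accuracy_multi_sketch(logits, targets, thresh=0.95))  # 5 of 6 slots correct
```

Two things follow from this. A high threshold counts a correct but not very confident label as a miss, and because every class slot is scored, this number is not directly comparable to 1 - error_rate from the single-label model.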

# verify results

learn.show_results()

# export model

learn.export(path/'multi_label_bear_classifier.pkl')

Model Inference

path = Path('/content/gdrive/MyDrive/fastai-course-v4/images')

Image with a Single Bear

# grizzly bear image

img = PILImage.create(path/'test'/'grizzly_test_1.jpg')

img

# load learners

single_learn_inf = load_learner(path/'bears'/'single_label_bear_classifier.pkl')

multi_learn_inf = load_learner(path/'bears'/'multi_label_bear_classifier.pkl')

# single label classification

single_learn_inf.predict(img)

('teddy', tensor(2), tensor([1.7475e-04, 3.7727e-04, 9.9945e-01]))

# multi label classification

multi_learn_inf.predict(img)

((#1) ['grizzly'],
tensor([False, True, False]),
tensor([6.3334e-05, 1.0000e+00, 1.4841e-04]))

Image with Two Bears

# image with grizzly and black bear

img = PILImage.create(path/'test'/'.jpg')

img

# single label classification

single_learn_inf.predict(img)

# multi label classification

multi_learn_inf.predict(img)

# image with grizzly and teddy bear

img = PILImage.create(path/'test'/'.jpg')

img

# single label classification

single_learn_inf.predict(img)

# multi label classification

multi_learn_inf.predict(img)

# image with black and teddy bear

img = PILImage.create(path/'test'/'.jpg')

img

# single label classification

single_learn_inf.predict(img)

# multi label classification

multi_learn_inf.predict(img)

Images without Bears

path = Path('/content/gdrive/MyDrive/fastai-course-v4/images/')

img = PILImage.create(path/'test'/'computer.jpg')

img

sigle_learn_inf.predict(img)

((#0) [], tensor([False, False, False]), tensor([0.1316, 0.1916, 0.0004]))

single_learn_inf.predict(img)[2].sum()

tensor(1.)

# set loss function threshold to 0.9

multi_learn_inf.predict(img)

((#0) [], tensor([False, False, False]), tensor([0.0275, 0.0196, 0.8457]))
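For the web application part of the prompt, the empty result has to be turned into something a user can read. Here is a minimal sketch of one way to do that, assuming the class order is black, grizzly, teddy (which matches the grizzly output earlier) and a 0.9 threshold. The helper `describe_prediction` and its messages are hypothetical, not part of fastai; in the app it would receive the third element of `multi_learn_inf.predict(img)` converted to a list.

```python
vocab = ['black', 'grizzly', 'teddy']  # assumed to match the learner's class order

def describe_prediction(probs, vocab, thresh=0.9):
    # keep every class whose probability clears the threshold
    found = [c for c, p in zip(vocab, probs) if p > thresh]
    if not found:
        return 'No bears detected'
    return 'Detected: ' + ', '.join(found)

# probabilities copied from the prediction outputs in this notebook
print(describe_prediction([0.0275, 0.0196, 0.8457], vocab))      # No bears detected
print(describe_prediction([6.3334e-05, 1.0, 1.4841e-04], vocab)) # Detected: grizzly
```

The same helper would list two labels for an image with two kinds of bears, provided both probabilities clear the threshold.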