vishal bakshi

welcome to my blog.

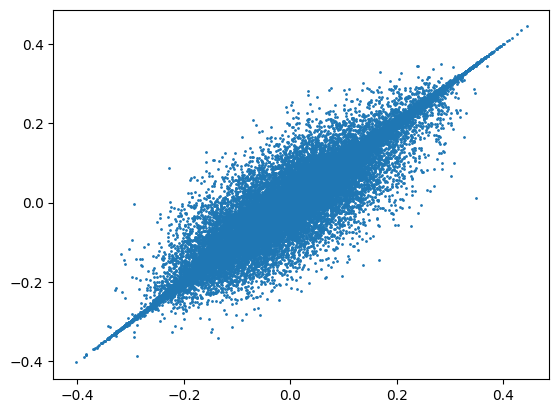

Logit Divergence Between Models Differently Converted to torch.bfloat16

python

deep learning

TIL: Custom Composer Callback to Push Checkpoints to HuggingFace Hub During Training

LLM

Custom Composer Callback

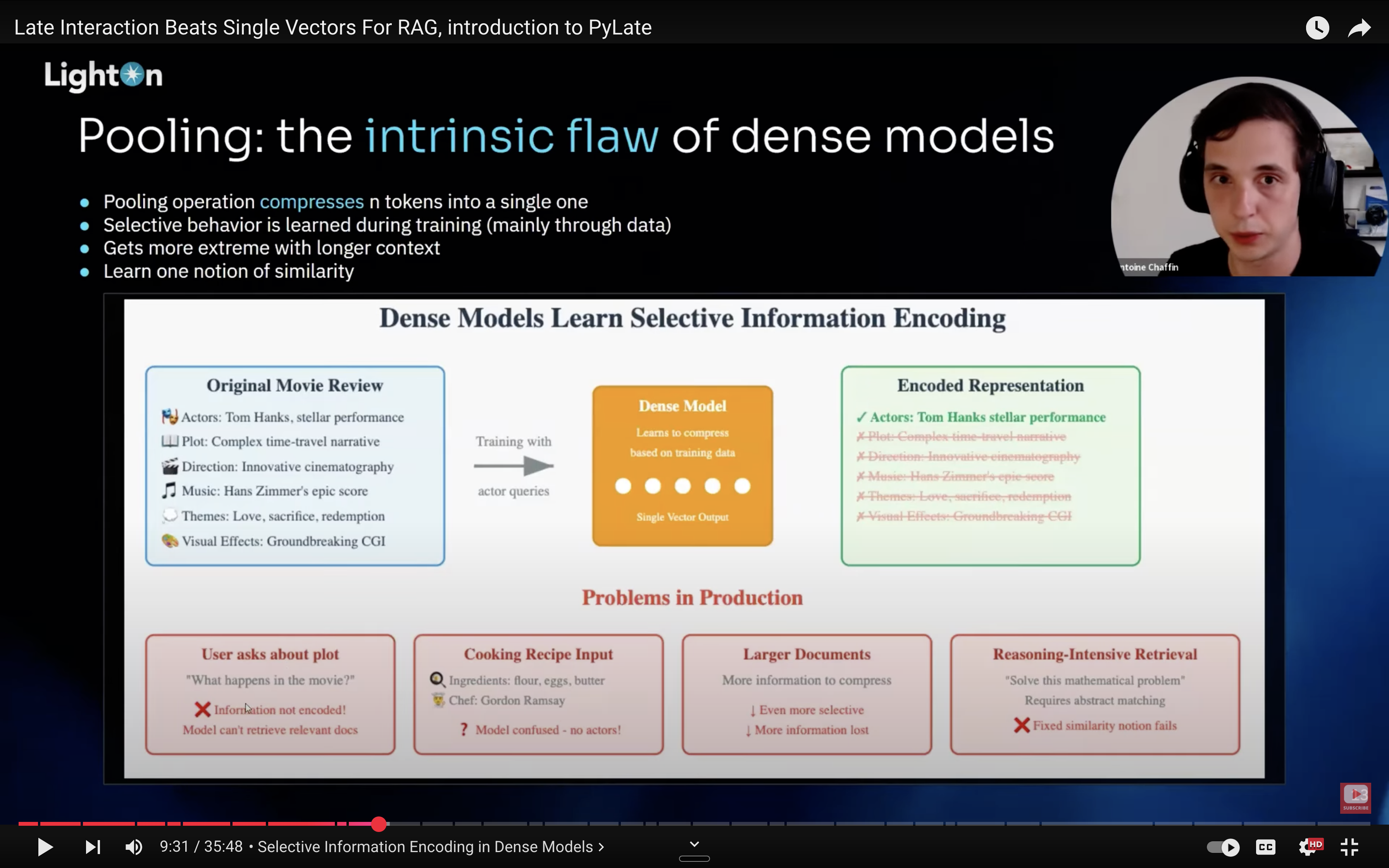

Revisiting ColBERTv1: A Return to First Principles

ColBERT

information retrieval

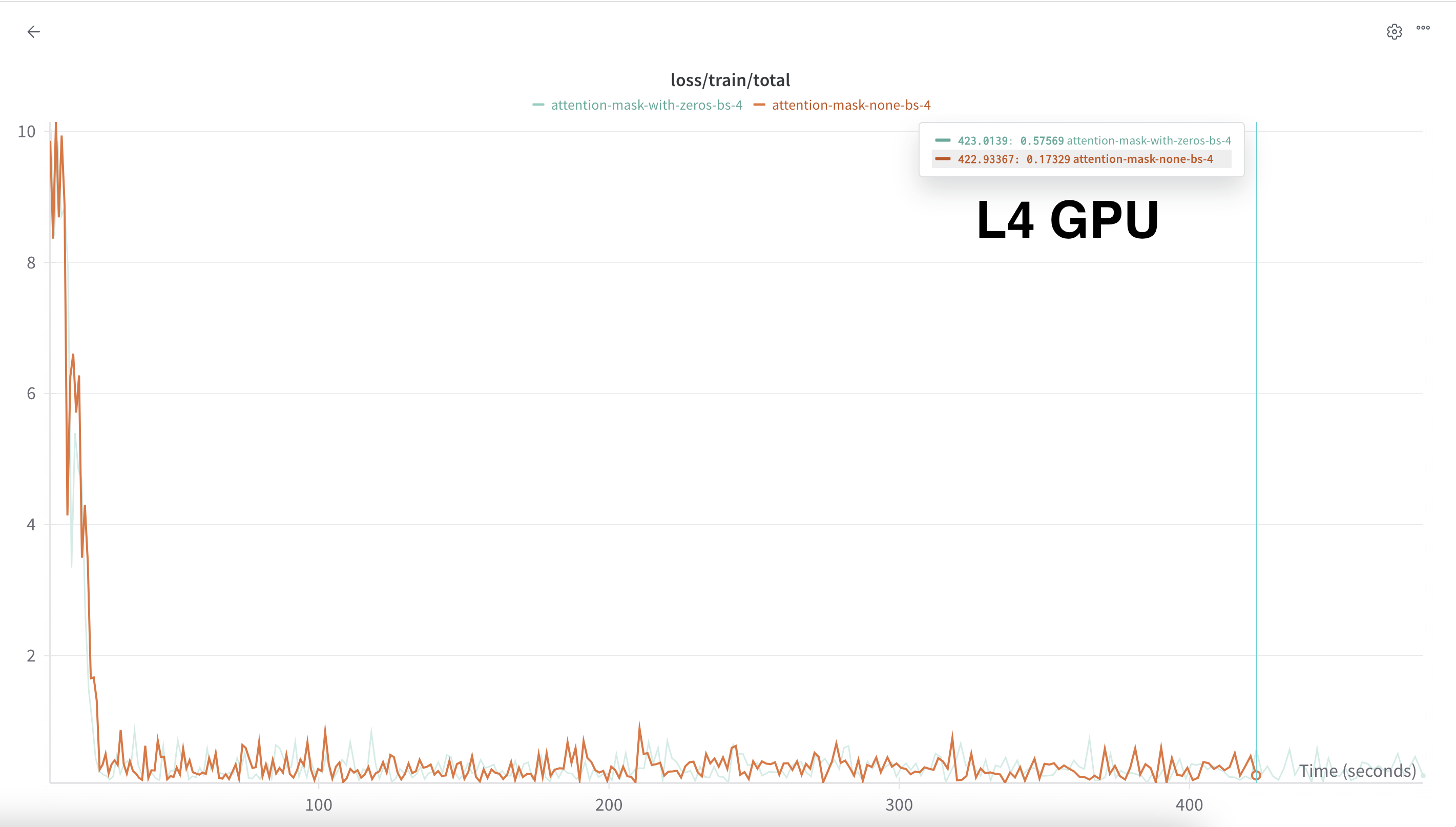

Debugging Flash Attention in LLM-Foundry (and a 20% Slow Down!)

python

deep learning

LLM-Foundry

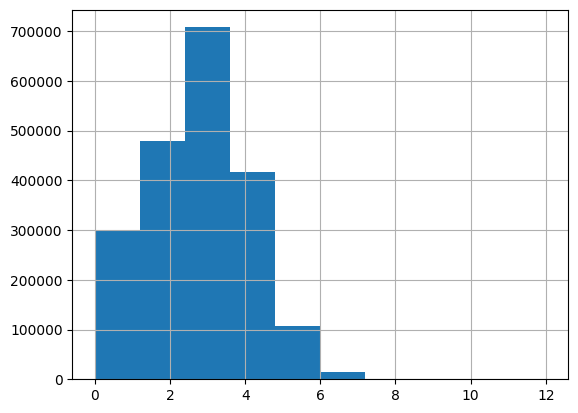

Takeaways from Gemini Deep Research Report on Small Batch Training Challenges

python

fastai

imagenette

TinyScaleLab

deep learning

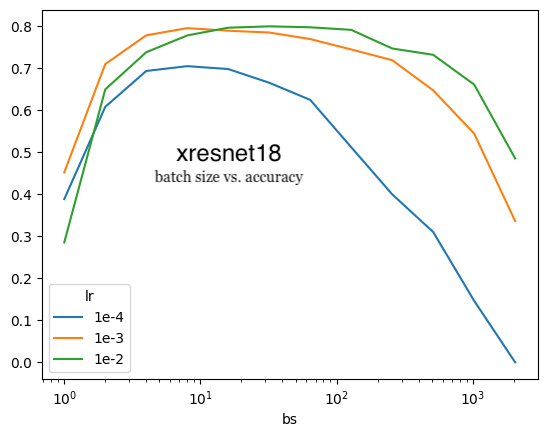

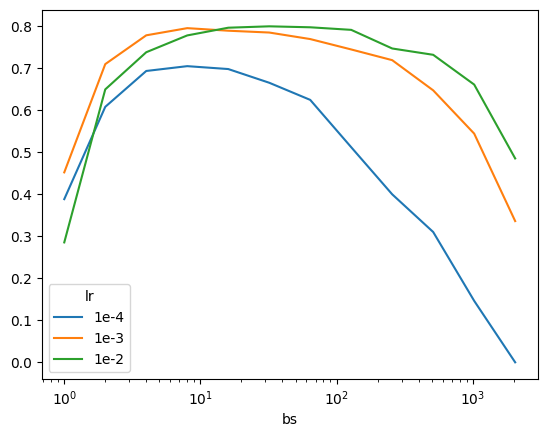

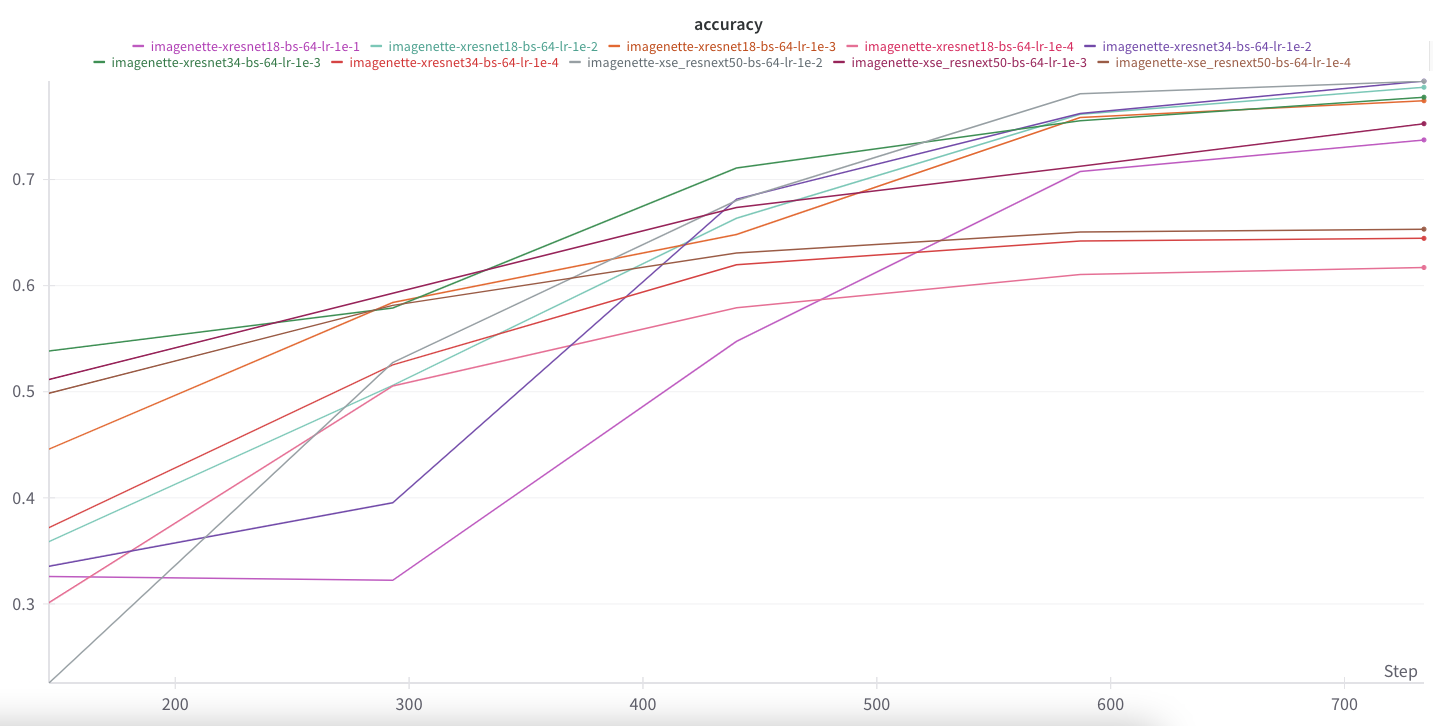

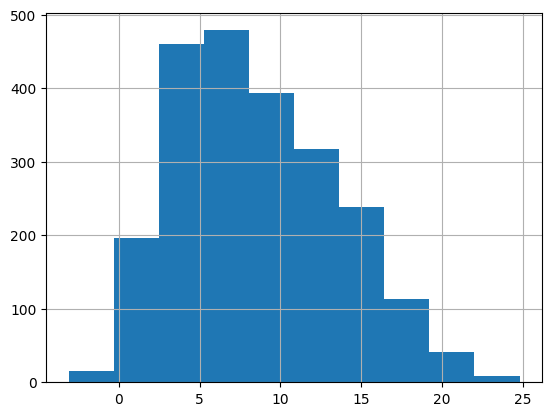

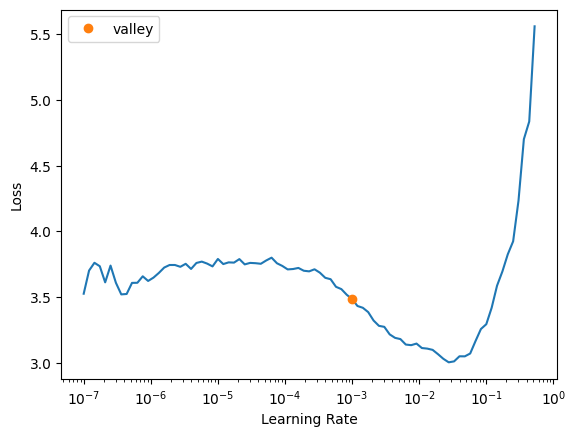

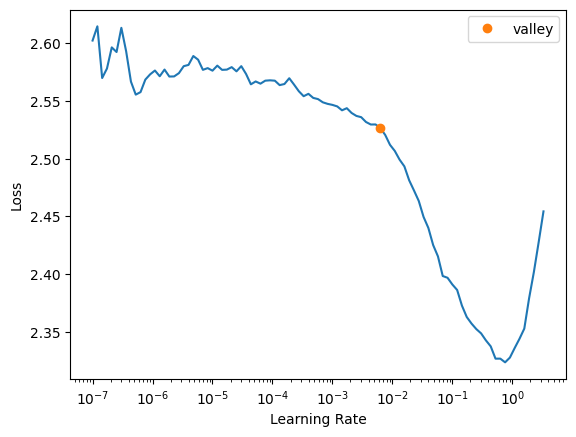

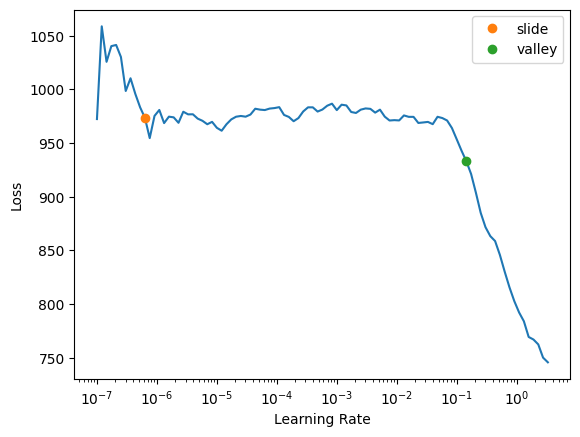

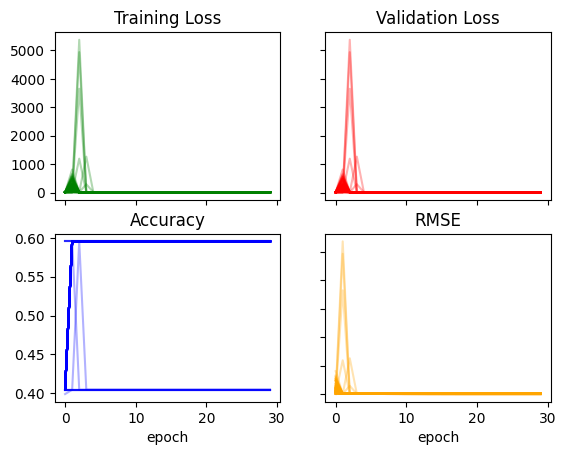

An Analysis of Batch Size vs. Learning Rate on Imagenette

python

fastai

deep learning

TinyScaleLab

imagenette

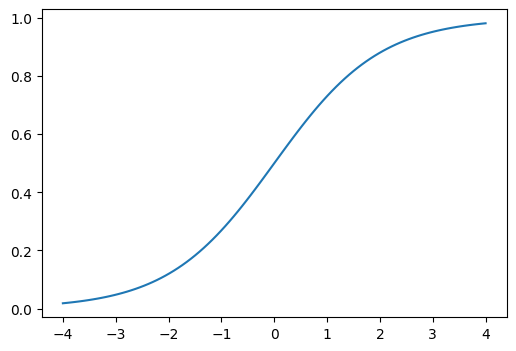

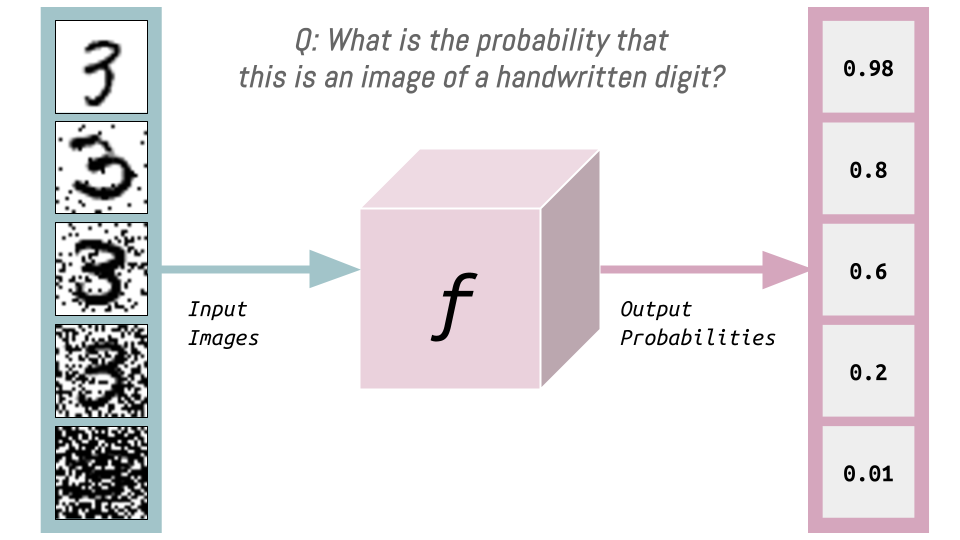

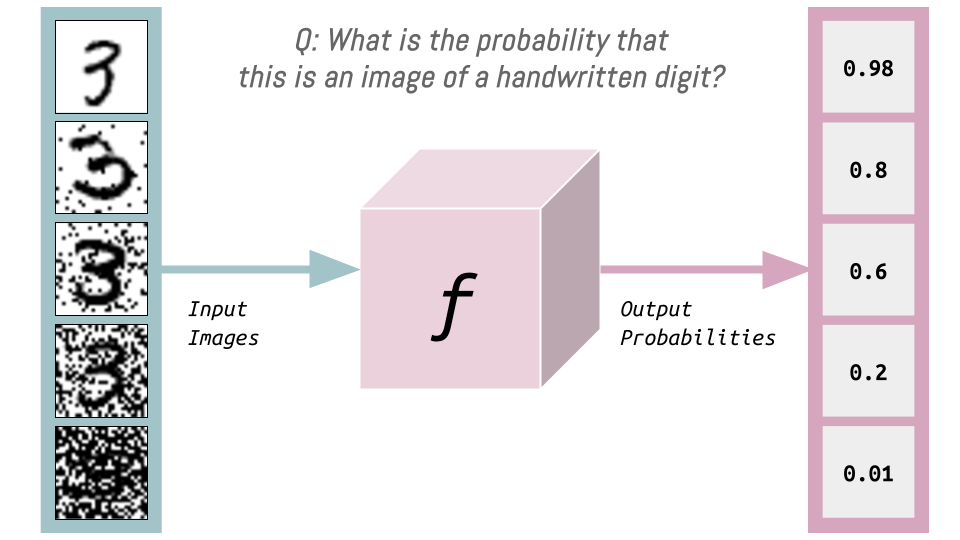

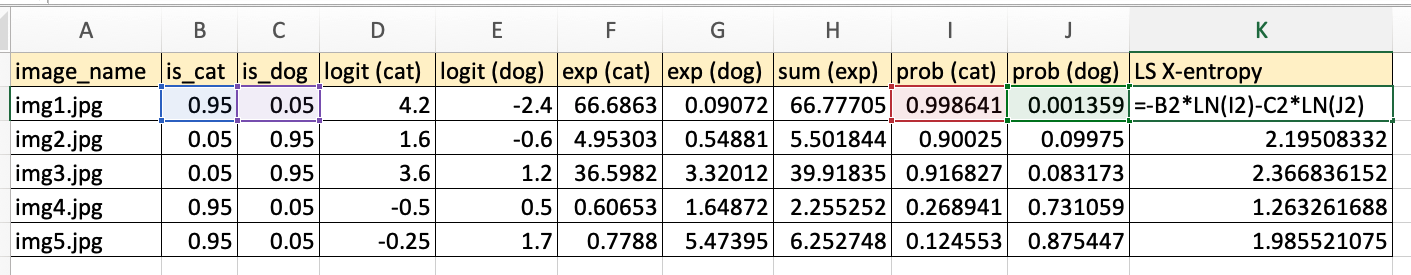

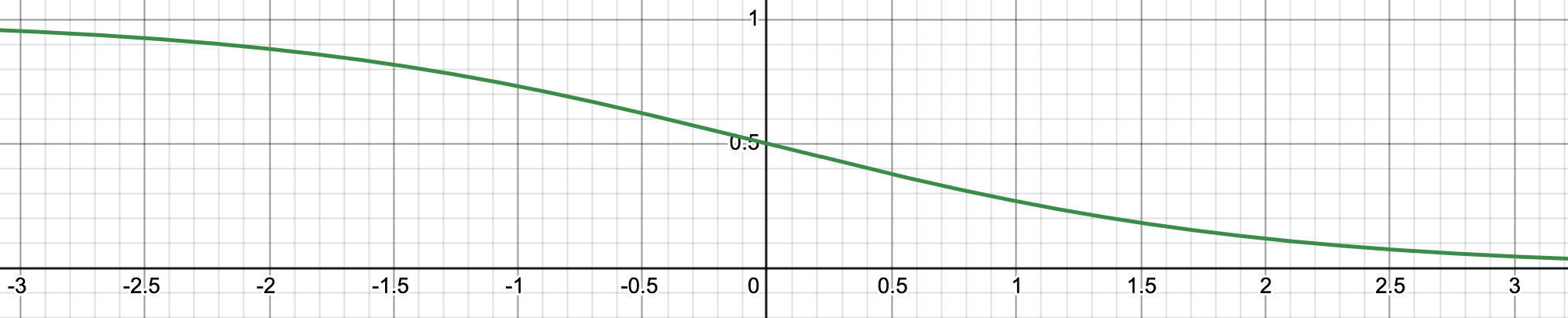

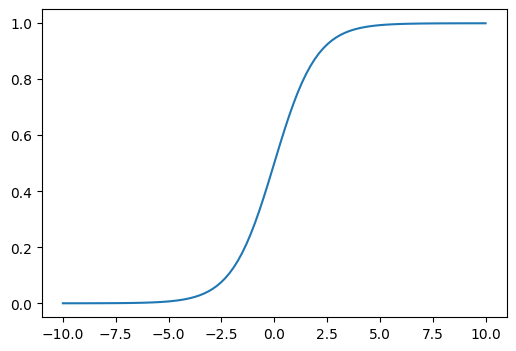

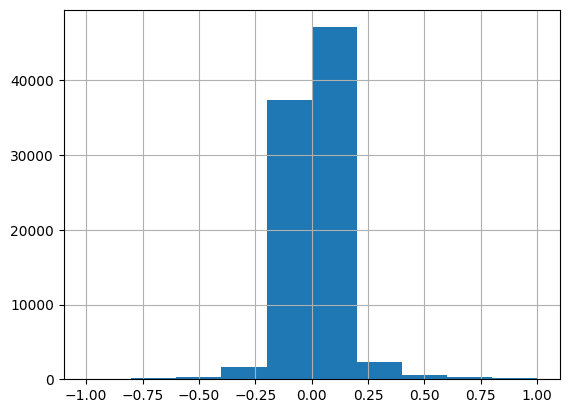

Cross Entropy Loss Explained

python

deep learning

fastai

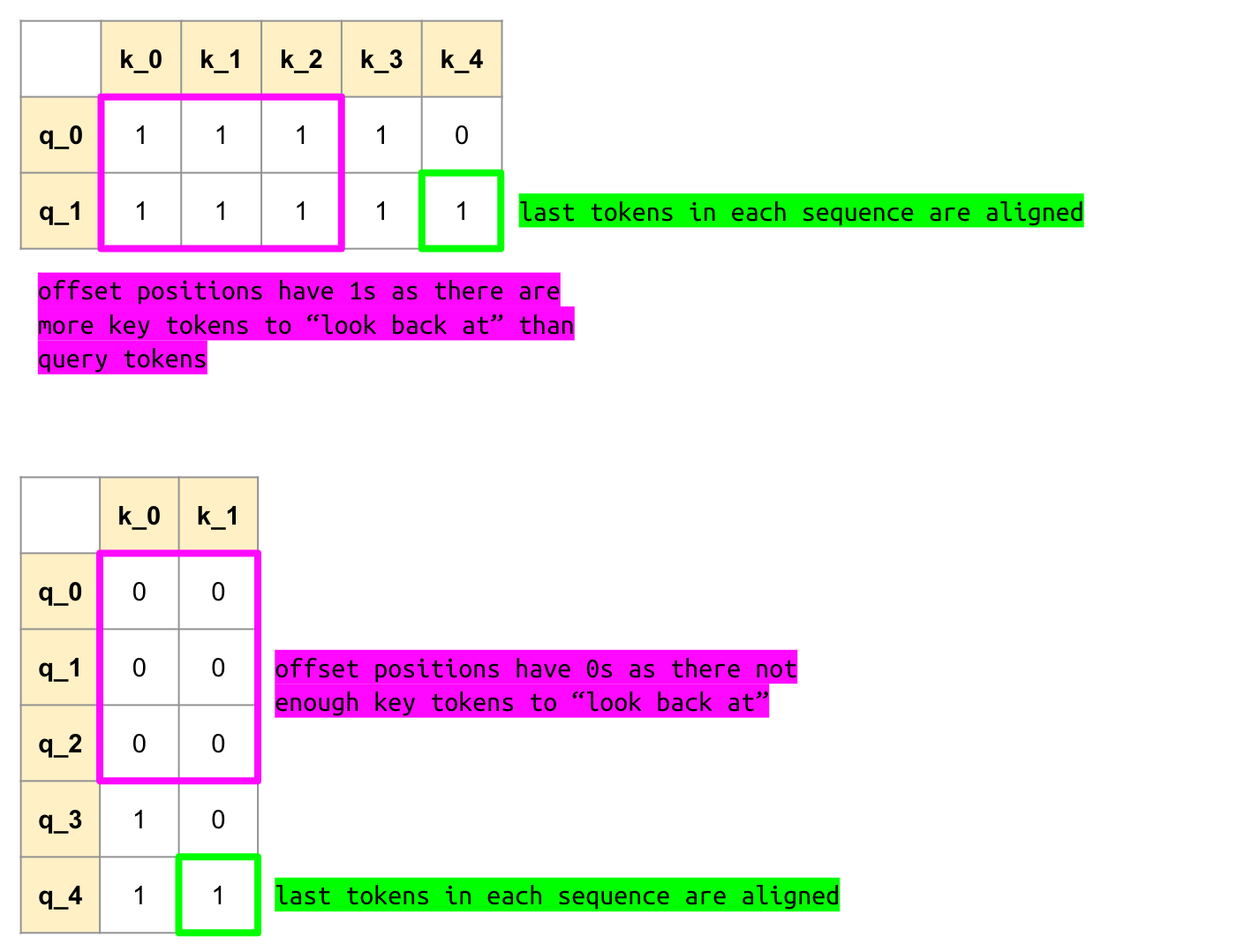

HuggingFace’s Default KV Cache and the flash_attn_varlen_func Docstring

python

deep learning

Flash Attention

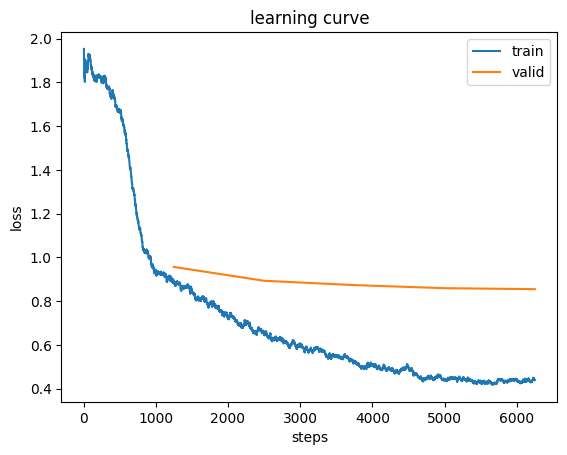

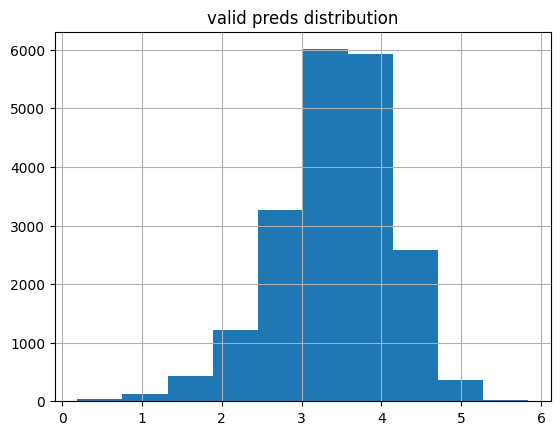

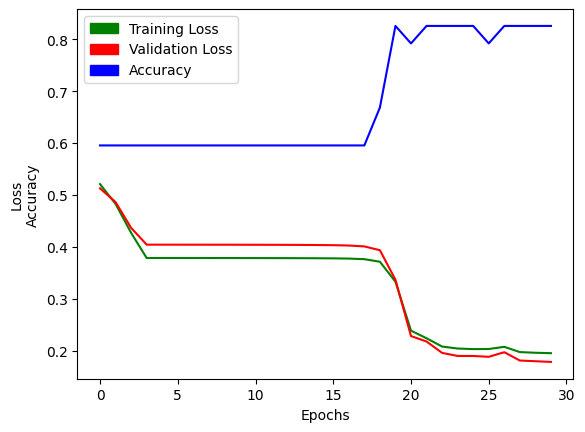

Initial Experiments with Imagenette

python

fastai

deep learning

TinyScaleLab

imagenette

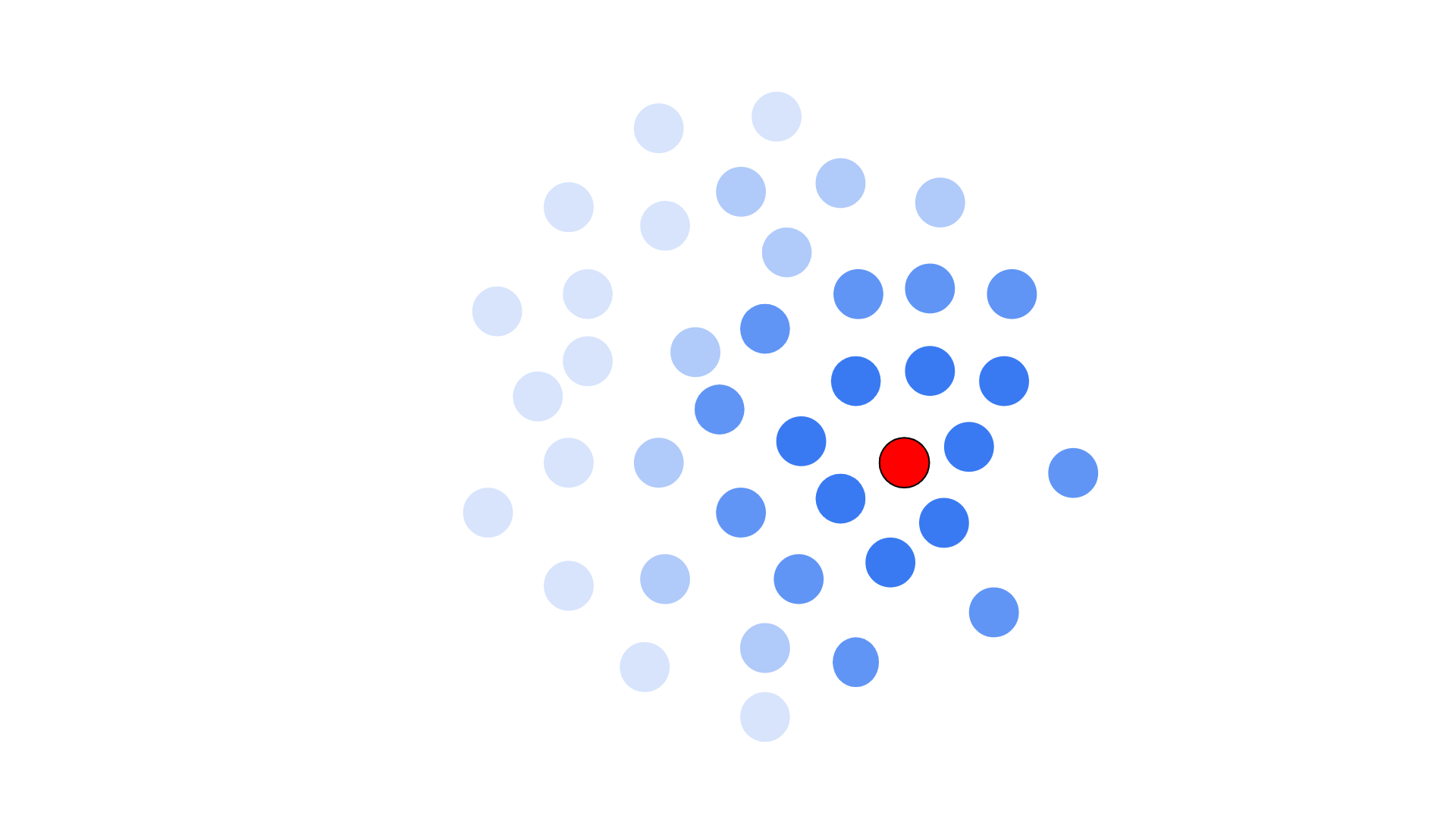

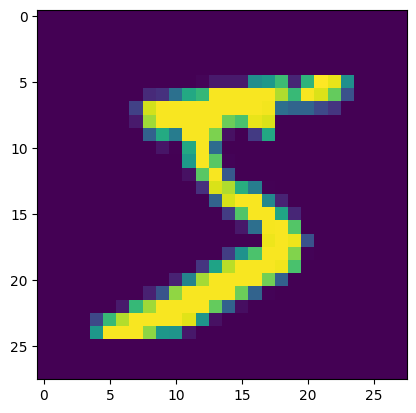

Understanding the Mean Shift Clustering Algorithm (and PyTorch Broadcasting)

python

machine learning

Exploring Precision in ColBERT Indexing and Retrieval

python

deep learning

ColBERT

information retrieval

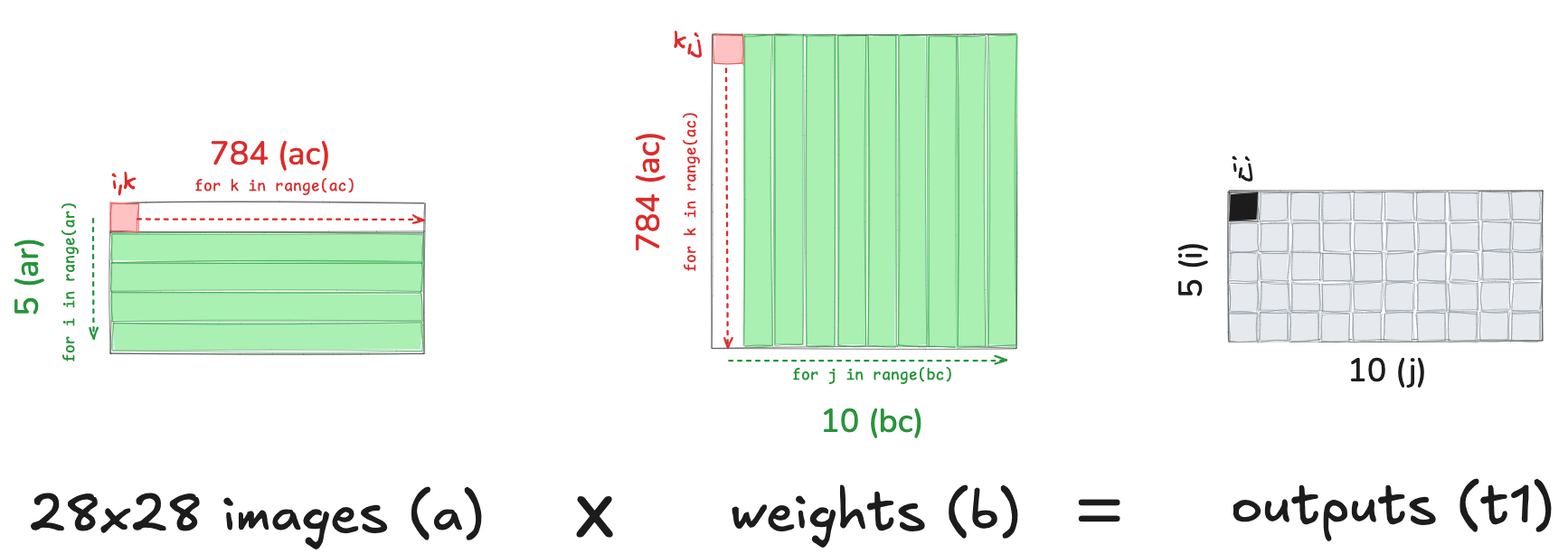

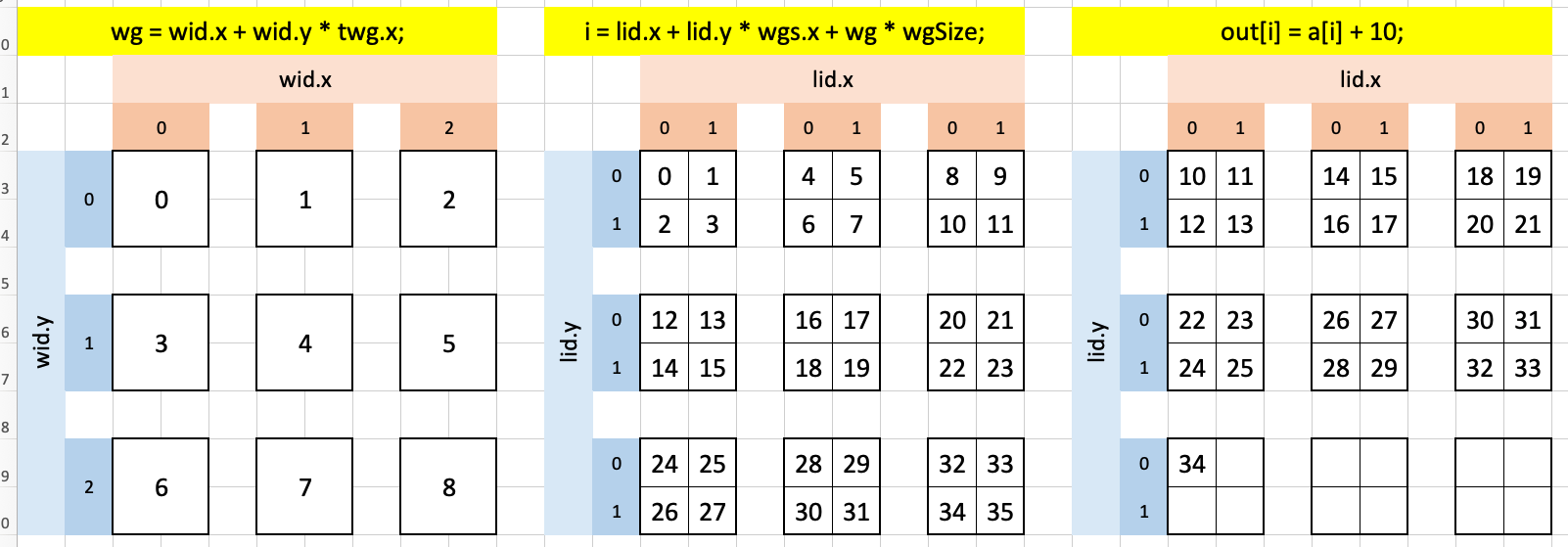

The Evolution of Matrix Multiplication (fastai course Part 2 Lessons 11 and 12)

python

deep learning

fastai

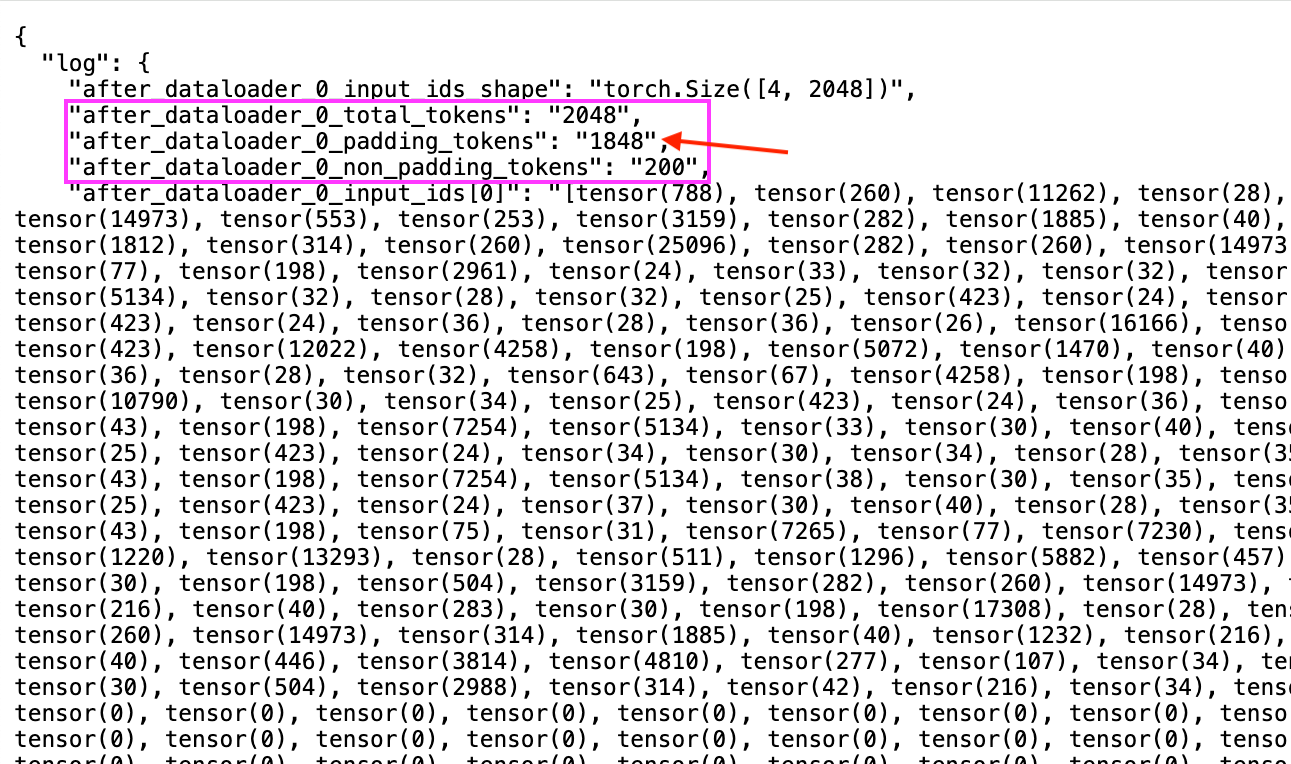

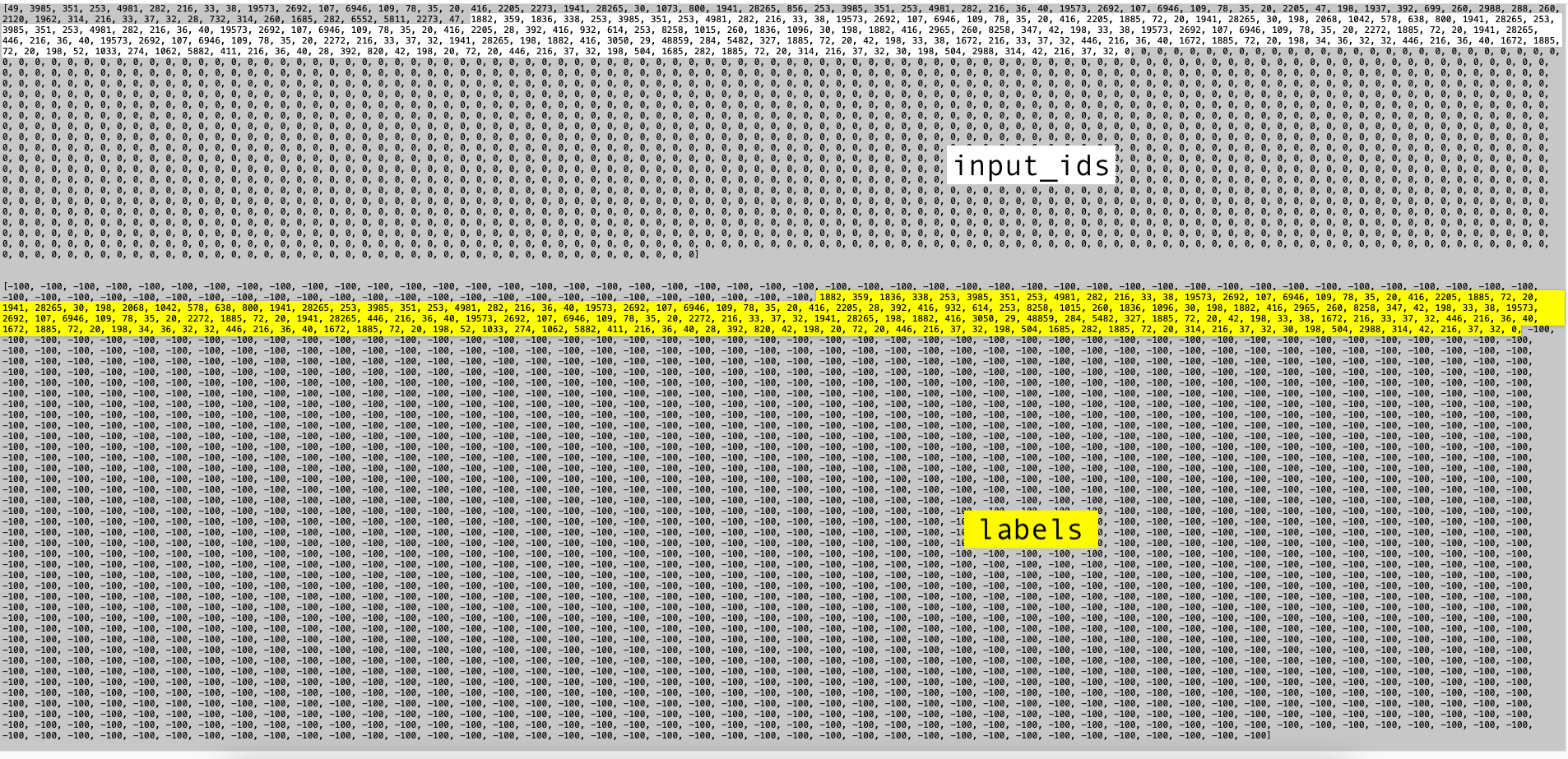

DataInspector with BinPackCollator: Inspecting Packed Dataloader Items

LLM

deep learning

LLM-Foundry

Custom Composer Callback

Comparing RAGatouille and ColBERT Indexes and Search Results

python

deep learning

information retrieval

RAGatouille

ColBERT

TIL: Resolving RAGatouille OOM Error and faiss-gpu Warning

information retrieval

deep learning

RAGatouille

Understanding Sequence Packing - Initial Musings

python

deep learning

LLM

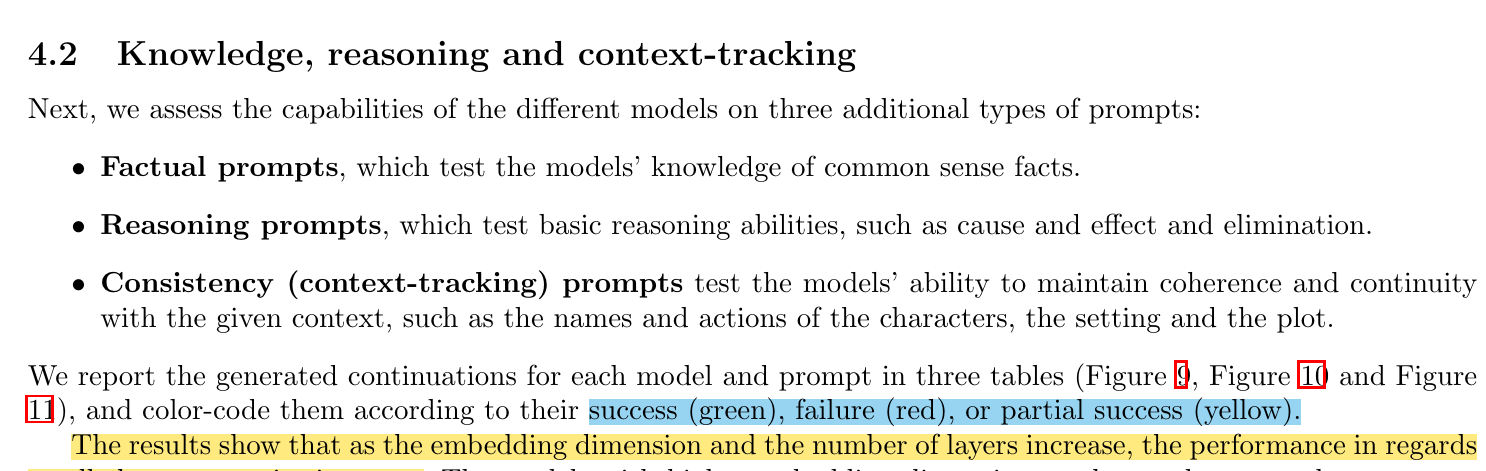

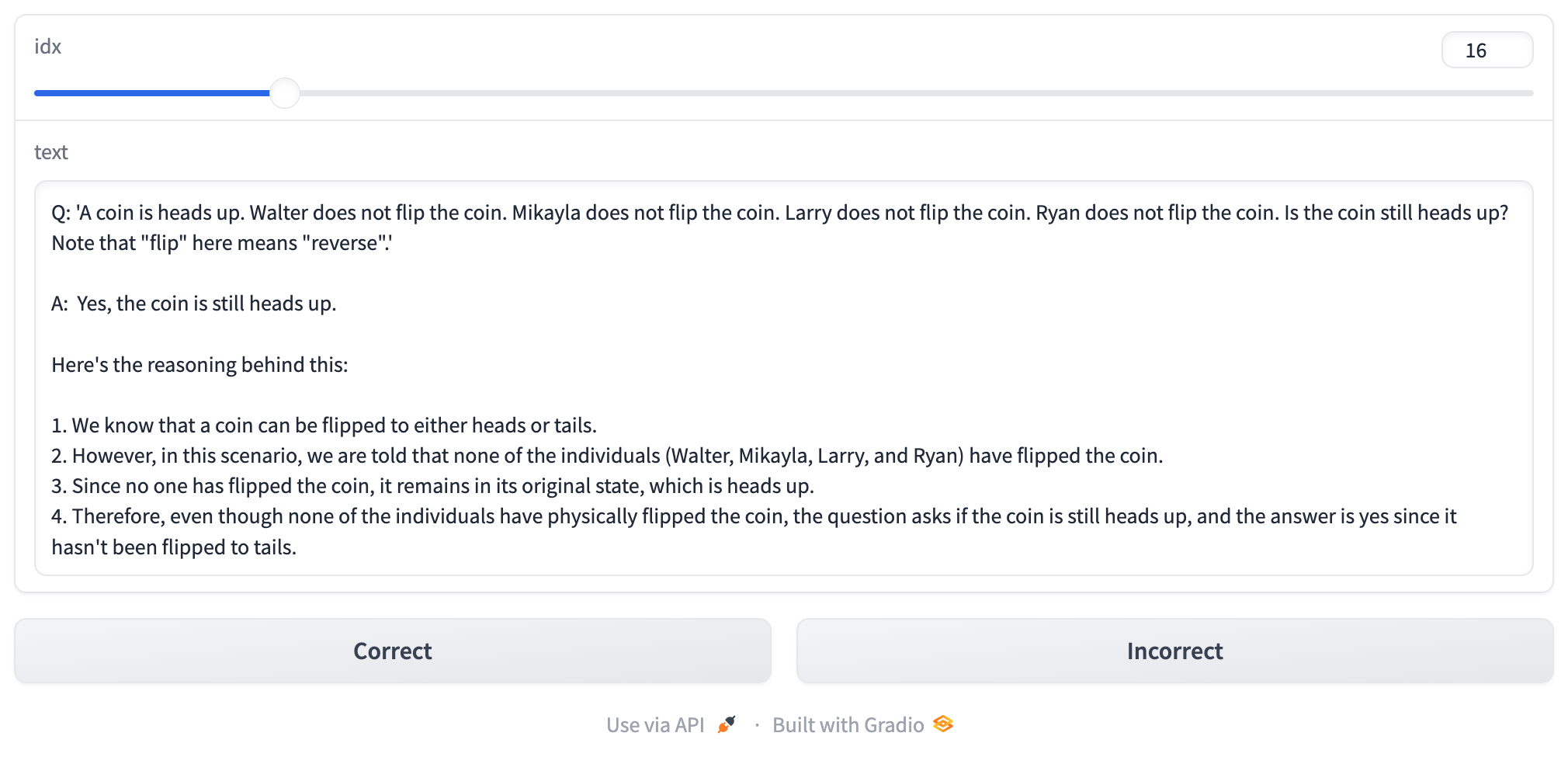

Initial Manual Scoring Results for TinyStories Models

LLM

deep learning

TinyScaleLab

Curating Evaluation Prompts, Defining Scoring Criteria, and Designing an LLM Judge Prompt Template

LLM

deep learning

TinyScaleLab

TinyScaleLab Update: Training Cost Analysis and Evaluation Infrastructure Plans

LLM

deep learning

TinyScaleLab

TinyScaleLab Update: Setting Eval Targets and Generating Completions for LLM Judge Development

LLM

deep learning

TinyScaleLab

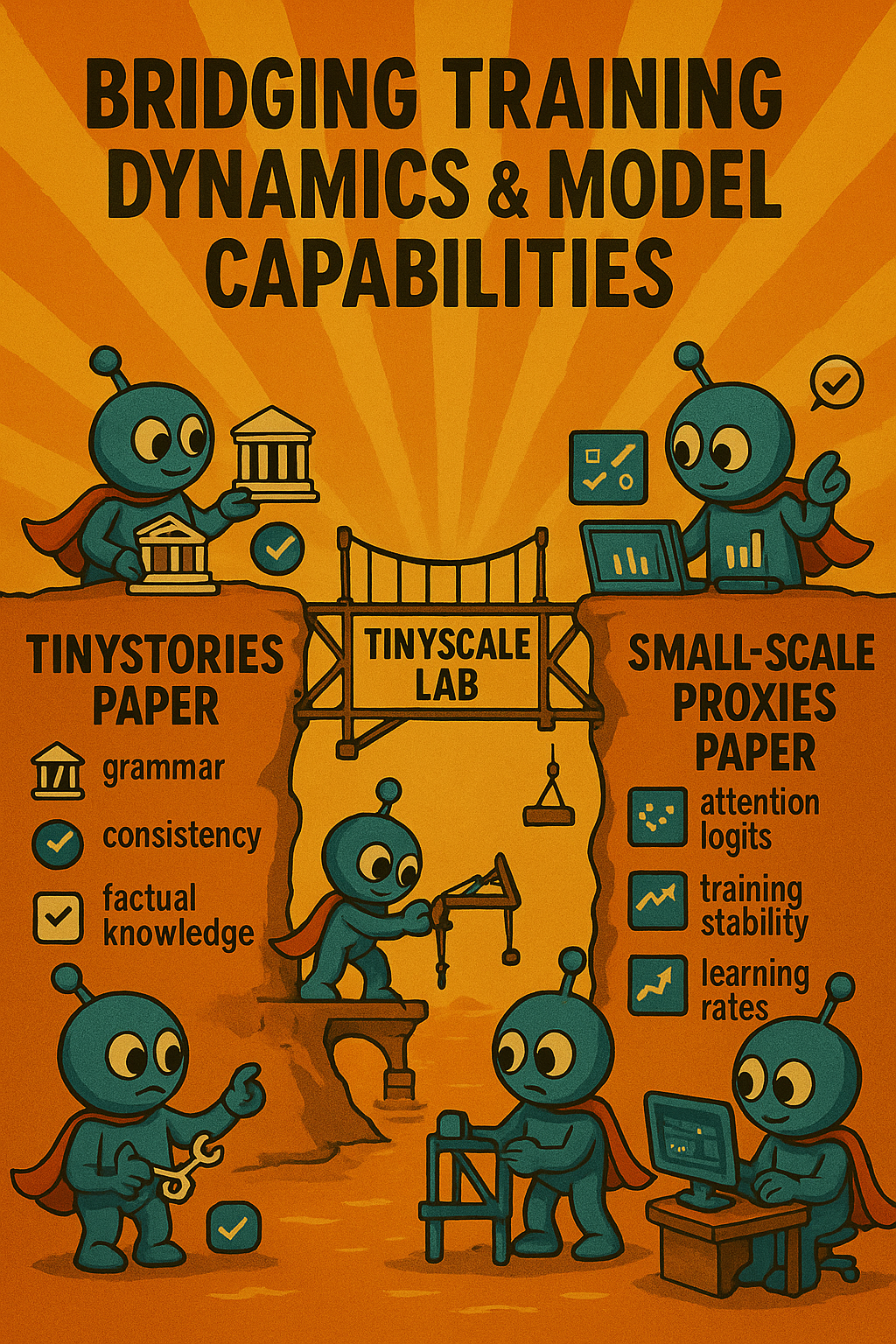

TinyScaleLab: Bridging Training Dynamics and Model Capabilities

LLM

deep learning

TinyScaleLab

LossInspector: A Deep Dive Into LLM-Foundry’s Next-Token Prediction with a Custom Composer Callback

python

deep learning

LLM

Understanding Python Descriptors

python

deep learning

LLM

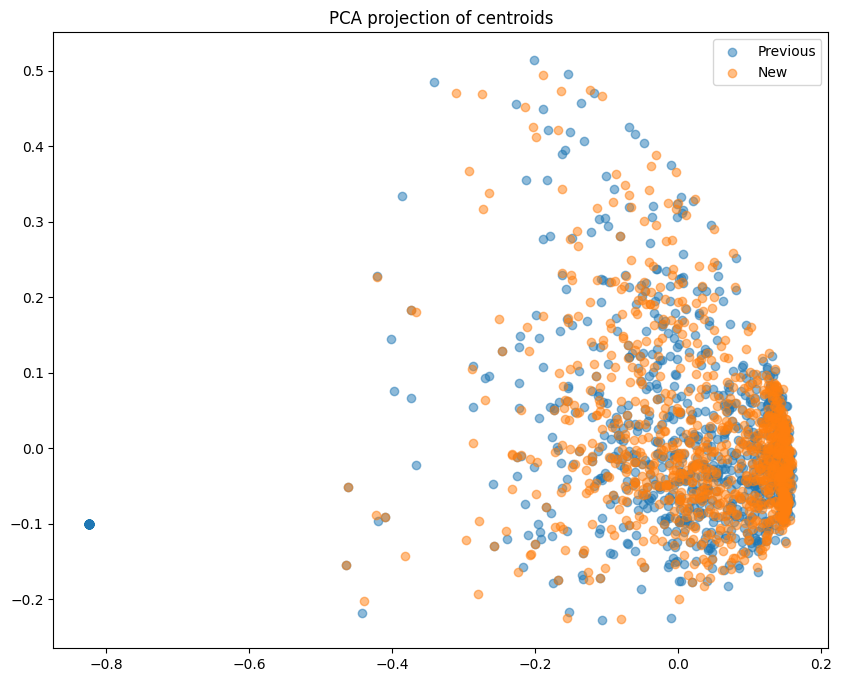

RAGatouille/ColBERT Indexing Deep Dive

python

information retrieval

machine learning

deep learning

RAGatouille

ColBERT

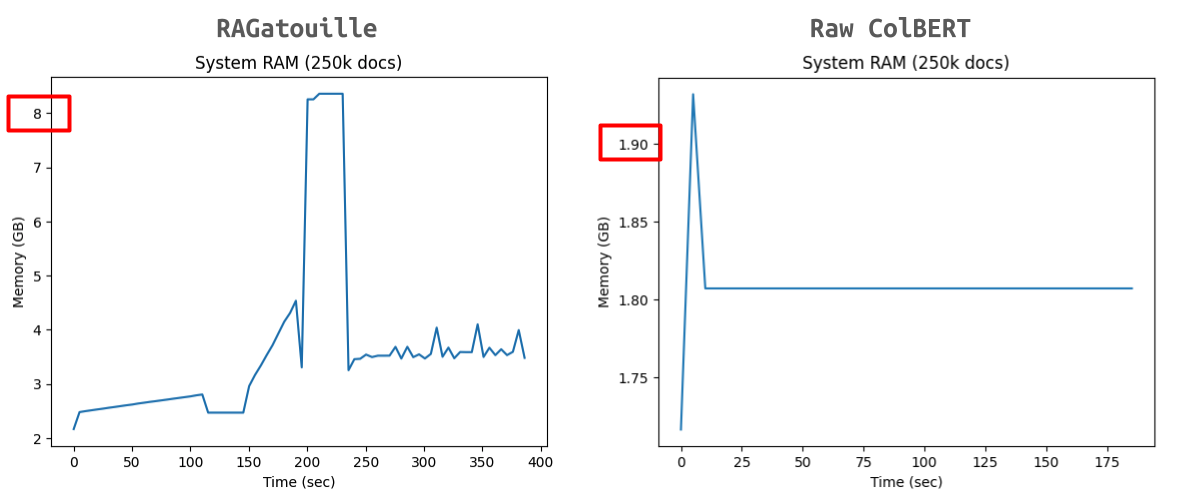

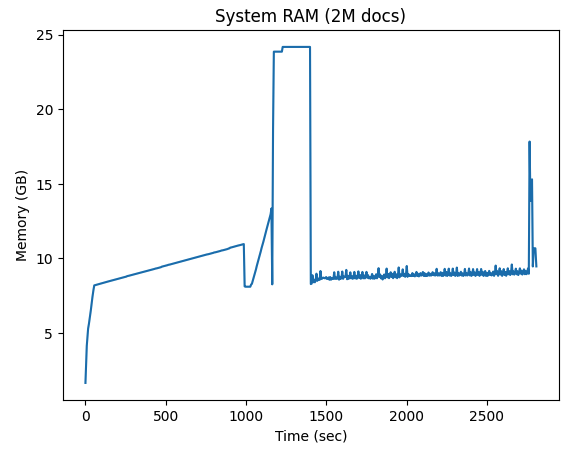

Memory Profiling raw ColBERT and RAGatouille

python

information retrieval

deep learning

RAGatouille

ColBERT

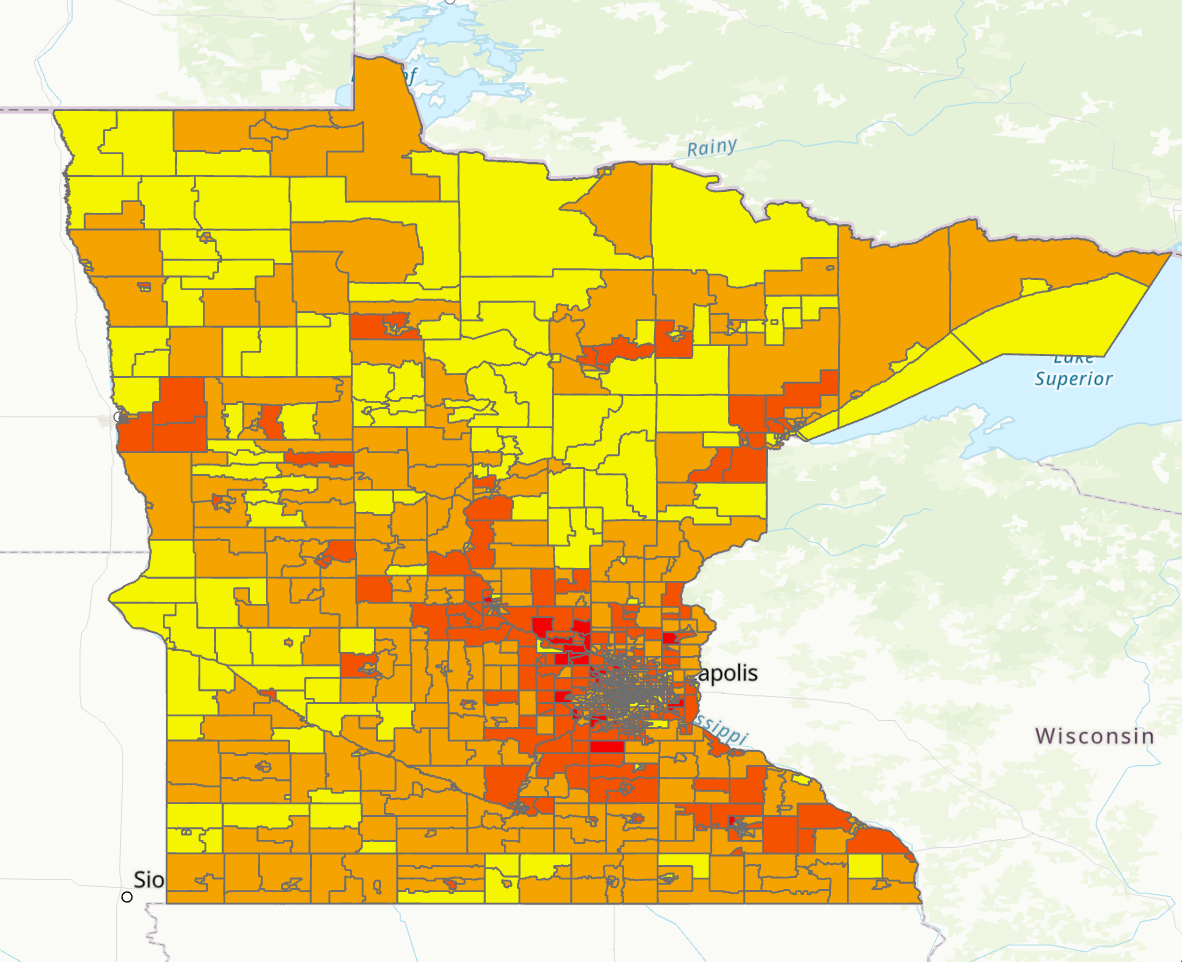

Estimating Storage and CPU RAM Requirements for Indexing 12.6M Documents

python

information retrieval

deep learning

RAGatouille

ColBERT

Evaluating the DAPR ConditionalQA Dataset with RAGatouille

python

information retrieval

deep learning

RAGatouille

ColBERT

DoRA’s Magnitude Vector

python

deep learning

machine learning

LLM

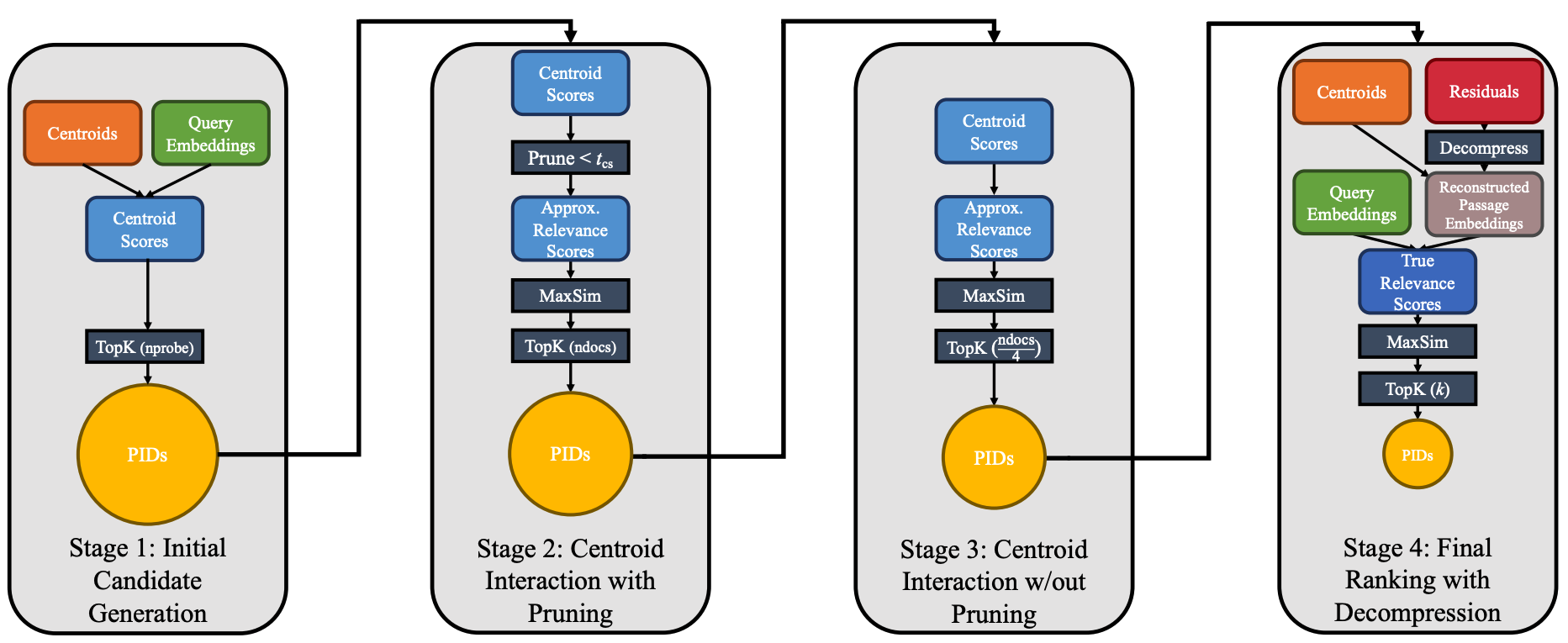

Recreating the PLAID ColBERTv2 Scoring Pipeline: From Research Code to RAGatouille

python

information retrieval

machine learning

deep learning

RAGatouille

ColBERT

Scoring Full Text and Semantic Search on Chunk Sizes from 100 to 2000 Tokens

python

RAG

information retrieval

fastbookRAG

Implementing Image-to-Image Generation for Stable Diffusion

python

stable diffusion

fastai

deep learning

machine learning

generative AI

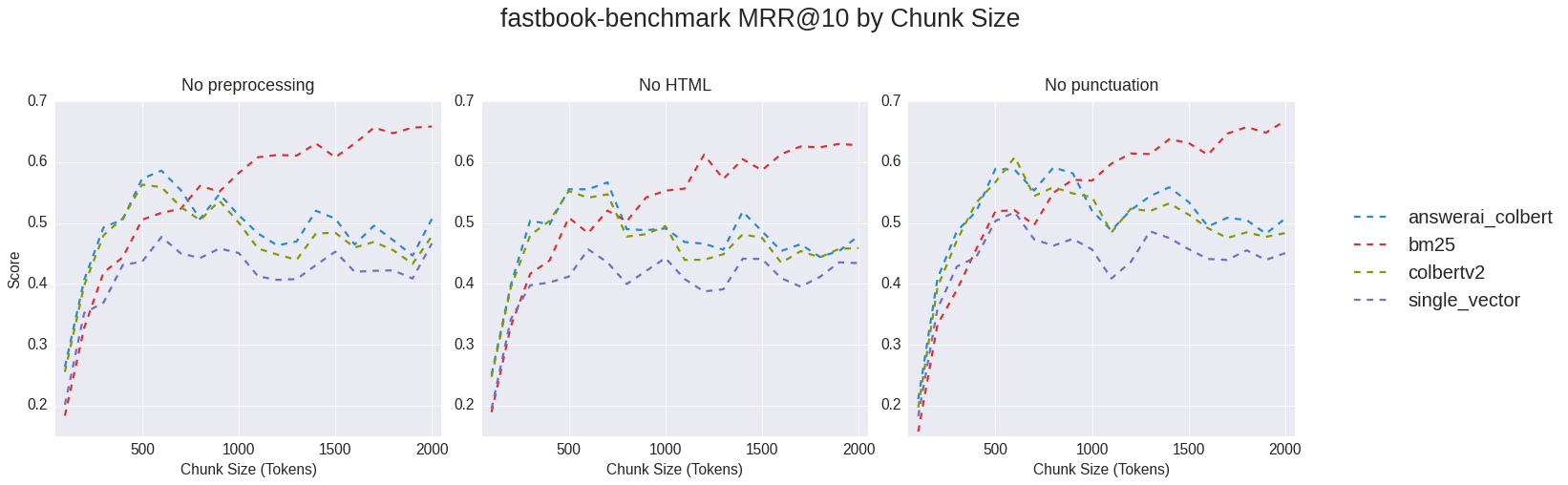

Evaluating 4 Retrieval Methods with 6 Chunking Strategies on my fastbook-benchmark Dataset

python

fastbookRAG

information retrieval

Implementing Negative Prompting for Stable Diffusion

python

stable diffusion

fastai

deep learning

machine learning

generative AI

Training Textual Inversion Embeddings on Some Samurai Jack Drawings

python

stable diffusion

deep learning

machine learning

Comparing Cosine Similarity Between Embeddings of Semantically Similar and Dissimilar Texts with Varying Punctuation

python

RAG

information retrieval

Establishing a Semantic Search (Embedding Cosine Similarity) Baseline for My fastbookRAG Project

python

RAG

information retrieval

fastbookRAG

Conducting a Question-by-Question Error Analysis on Semantic Search Results

python

RAG

information retrieval

fastbookRAG

Generating a GIF Animation Using Stable Diffusion

python

stable diffusion

fastai

deep learning

machine learning

generative AI

Calculating the Ratio of Gradients in an Image

python

computer vision

TypefaceClassifier

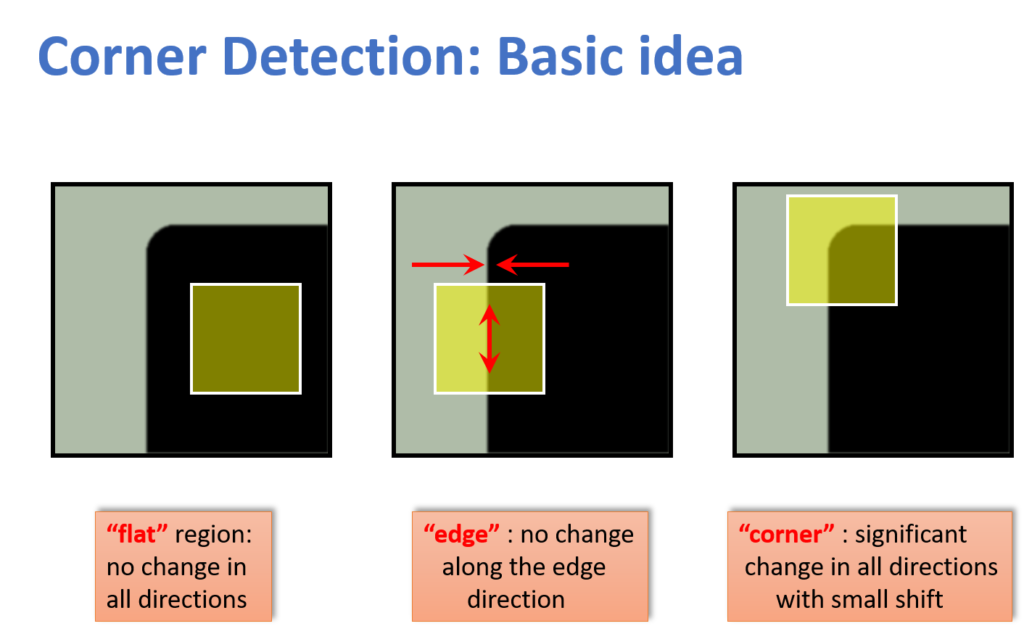

Calculating the Ratio of Corners in an Image

python

computer vision

TypefaceClassifier

Calculating the Ratio of 2D FFT Magnitude and Phase of a Text Image

python

computer vision

TypefaceClassifier

Conducting a Question-by-Question Error Analysis on Full Text Search Results

python

RAG

information retrieval

fastbookRAG

Establishing a Full Text Search (BM25) Baseline for My fastbookRAG Project

python

RAG

information retrieval

fastbookRAG

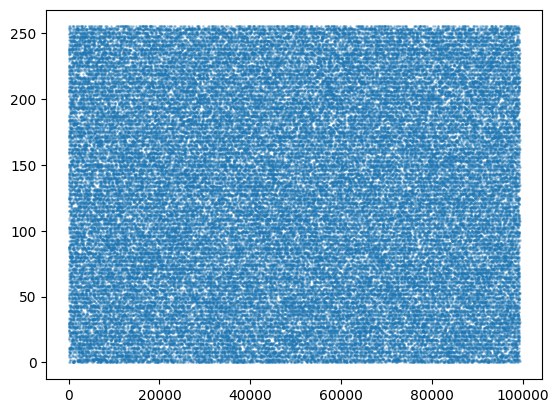

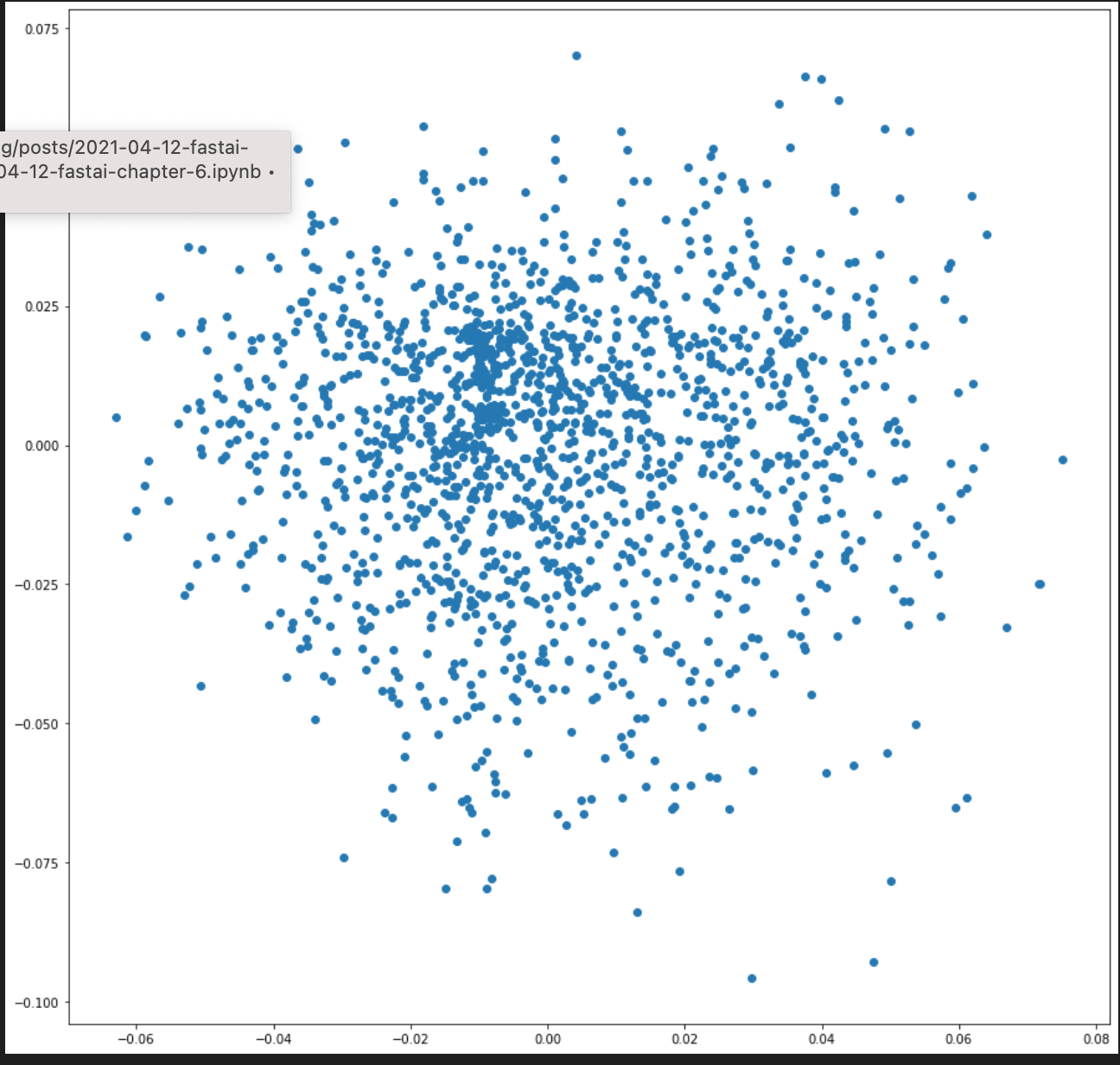

Comparing ~100k Random Numbers Generated with Different Methods

python

machine learning

deep learning

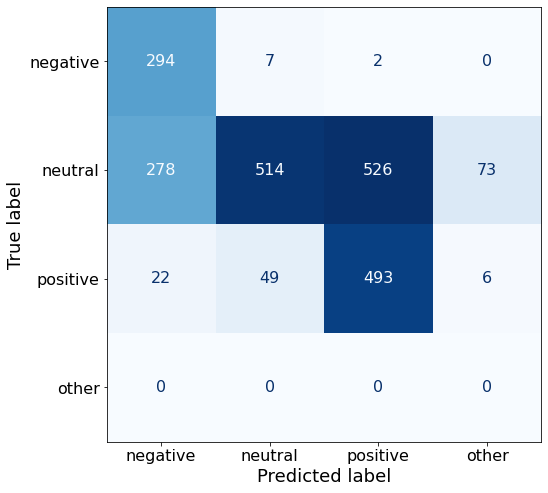

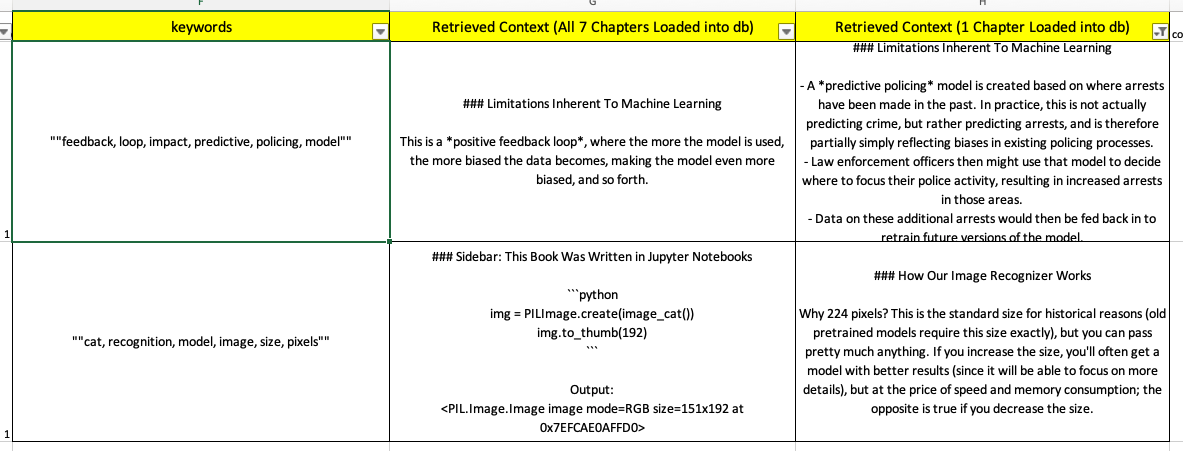

Iterating on Full Text Search Keywords using claudette

python

RAG

information retrieval

LLM

fastbookRAG

Generating Full Text Search Keywords using claudette

python

RAG

information retrieval

LLM

fastbookRAG

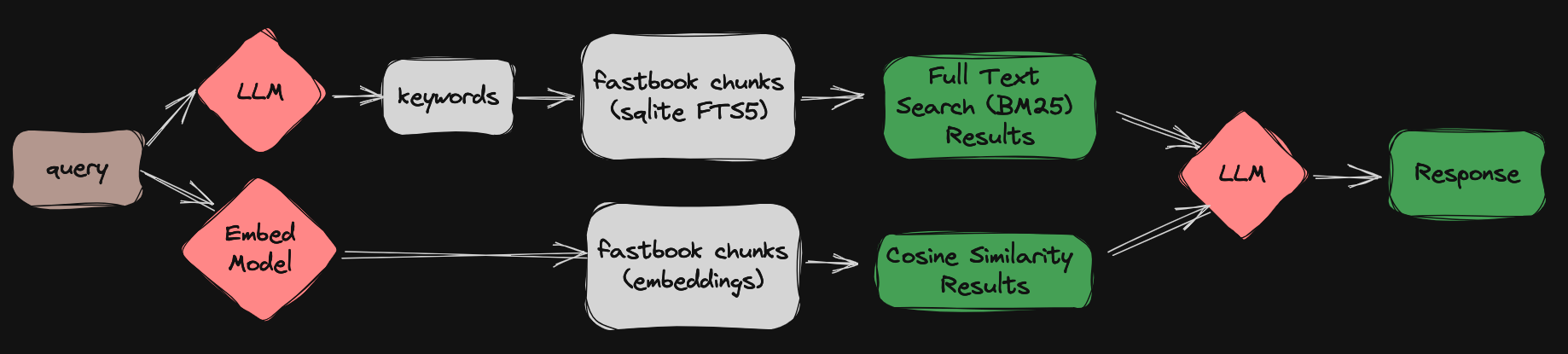

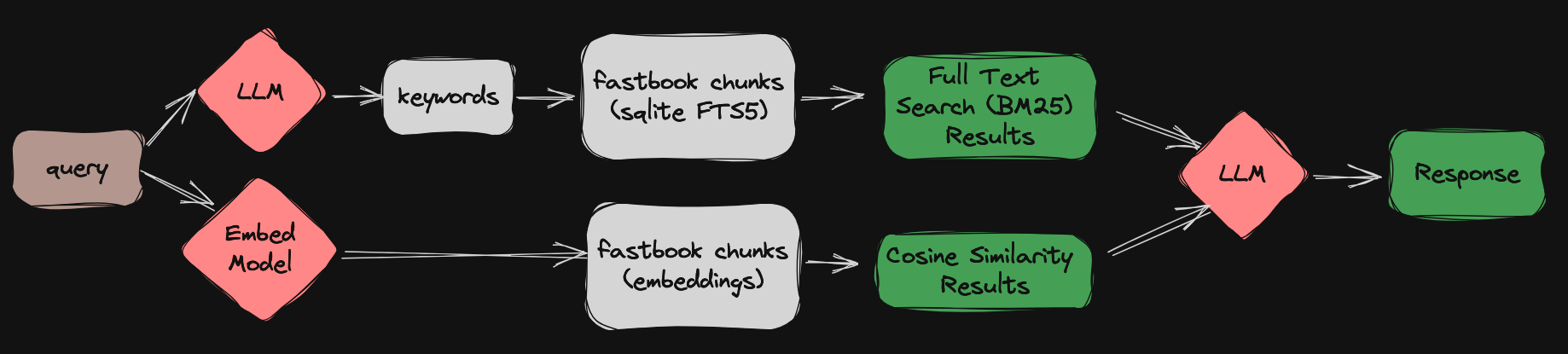

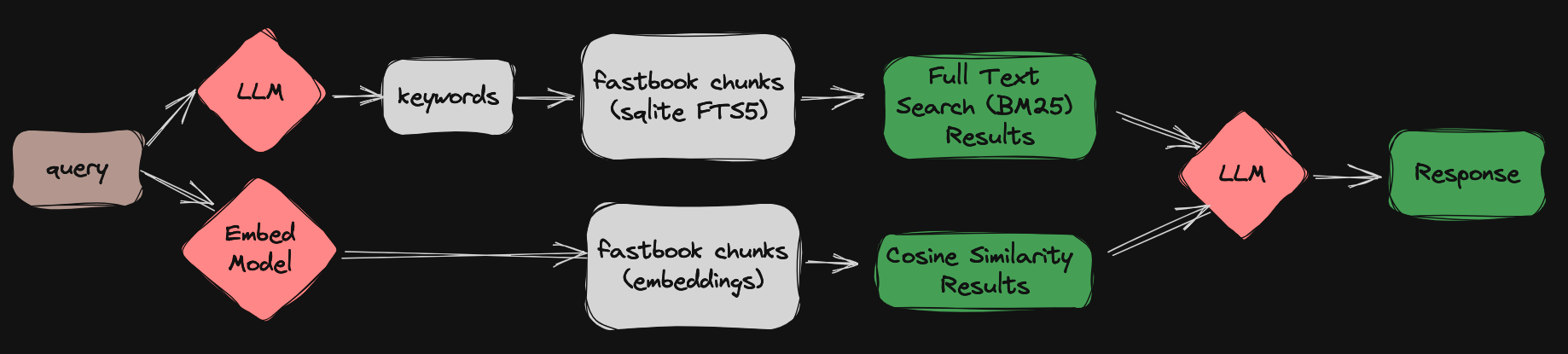

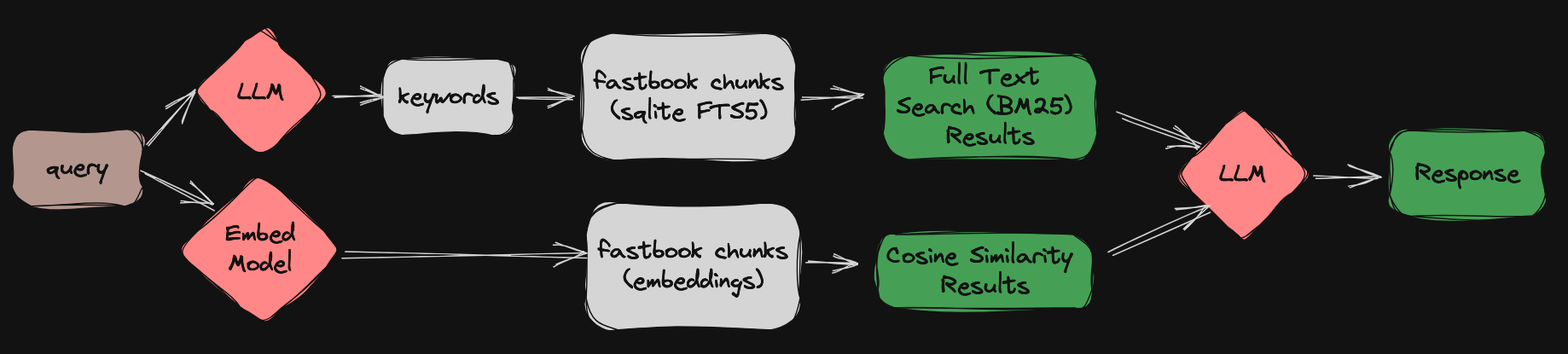

Using Hybrid Search to Answer the fastai Chapter 1 Questionnaire

python

RAG

information retrieval

fastbookRAG

How Does Stable Diffusion Work?

deep learning

machine learning

fastai

stable diffusion

generative AI

Using Full Text Search to Answer the fastbook Chapter 1 Questionnaire

python

RAG

information retrieval

fastbookRAG

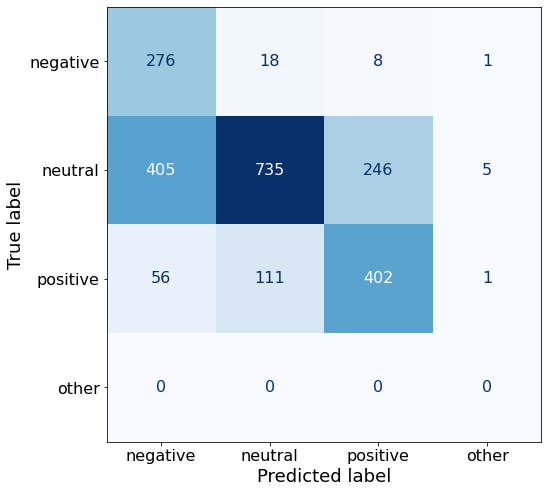

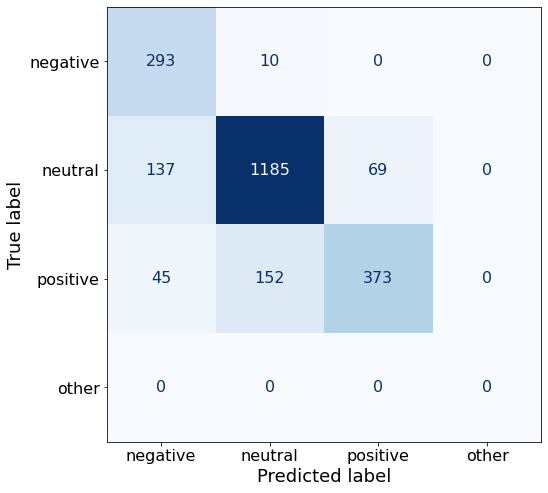

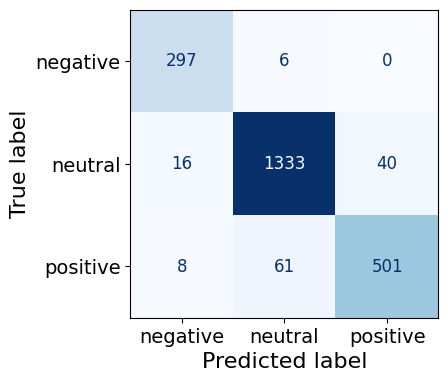

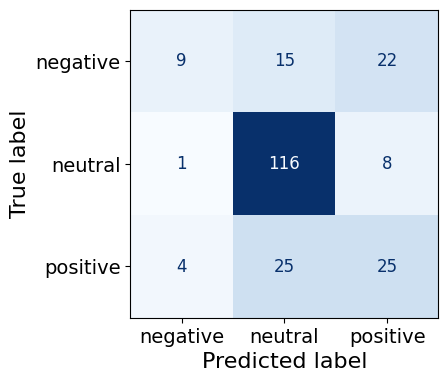

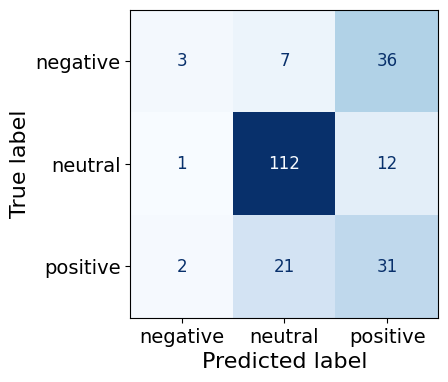

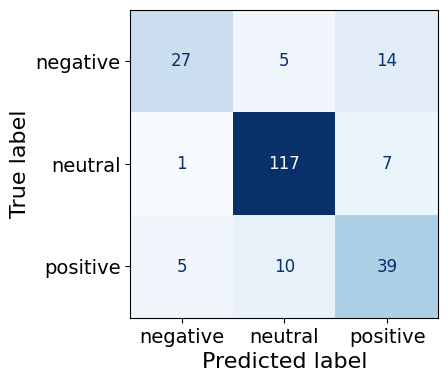

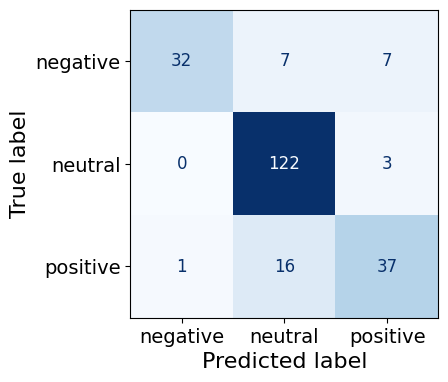

Calculating the Flesch Kincaid Reading Grade Level for the financial_phrasebank Dataset

python

machine learning

deep learning

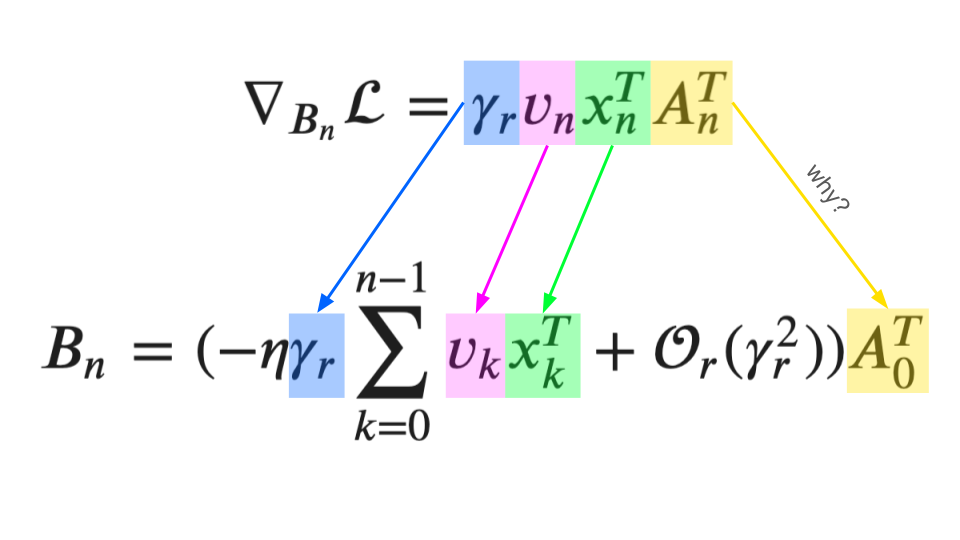

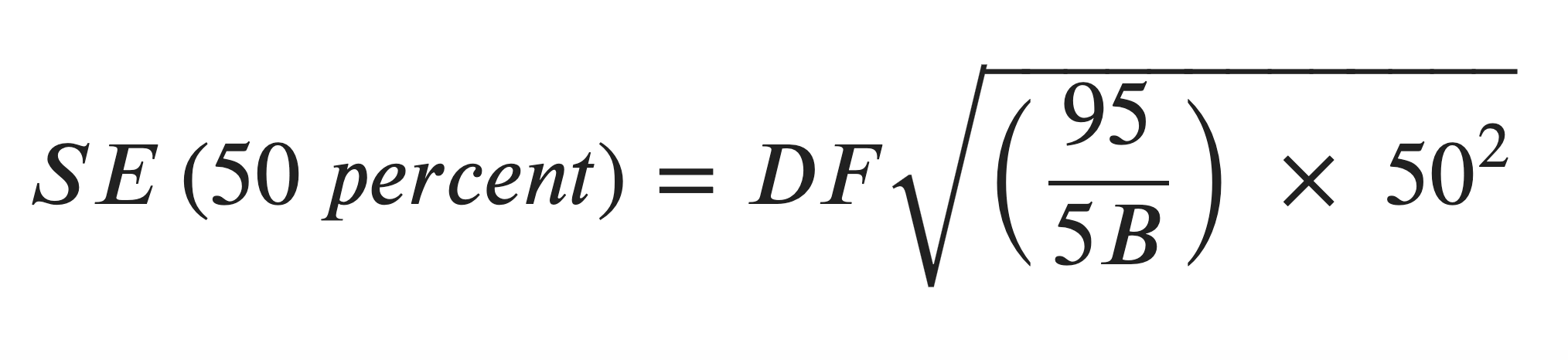

Paper Math: rsLoRA

deep learning

machine learning

paper math

LLM

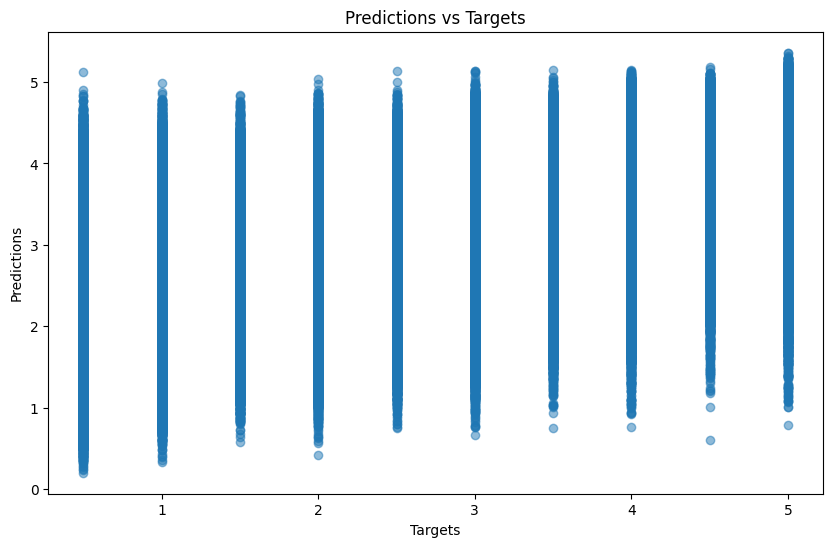

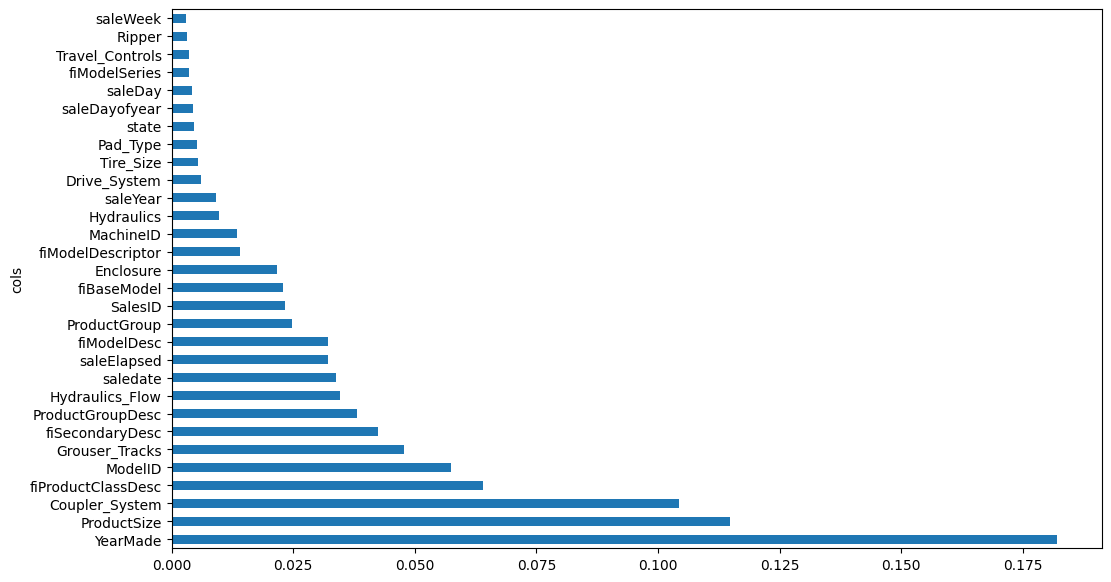

Training Collaborative Filtering Models on MovieLens 100k with Different Weight Decay Values

machine learning

fastai

python

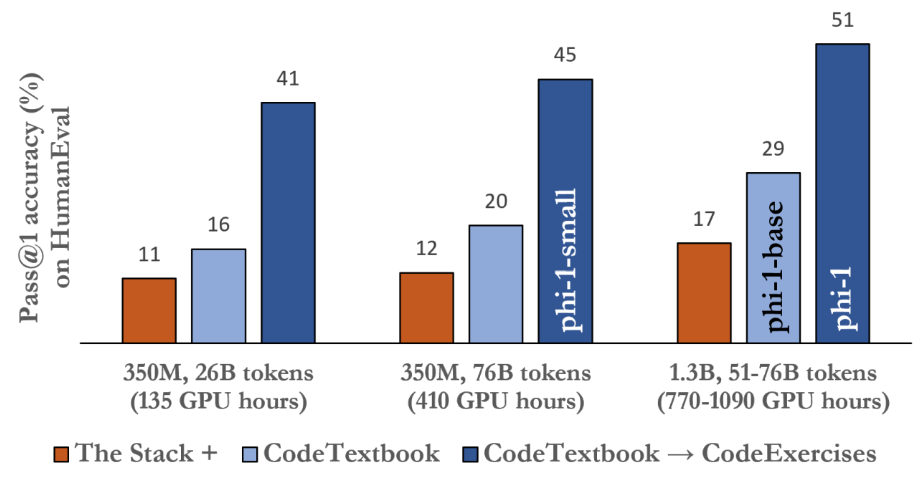

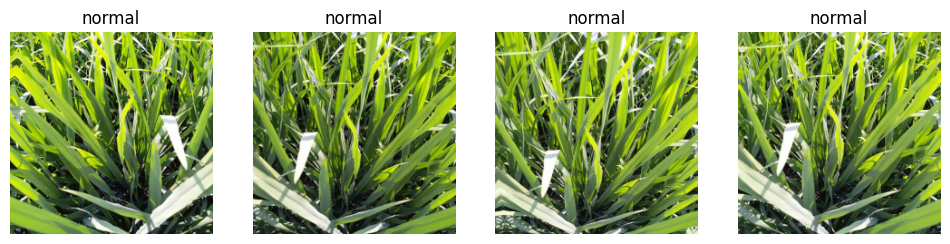

Improving Kaggle Private Score with Multi-Target Classification

deep learning

fastai

kaggle competition

paddy doctor

python

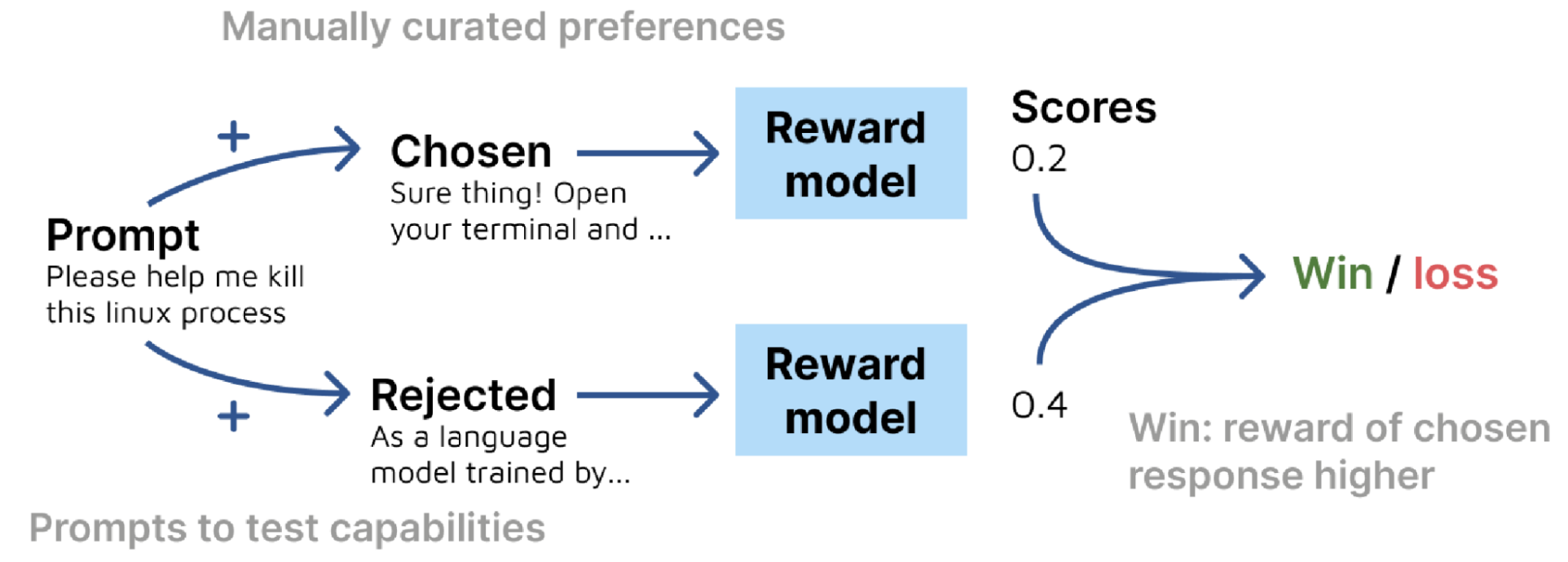

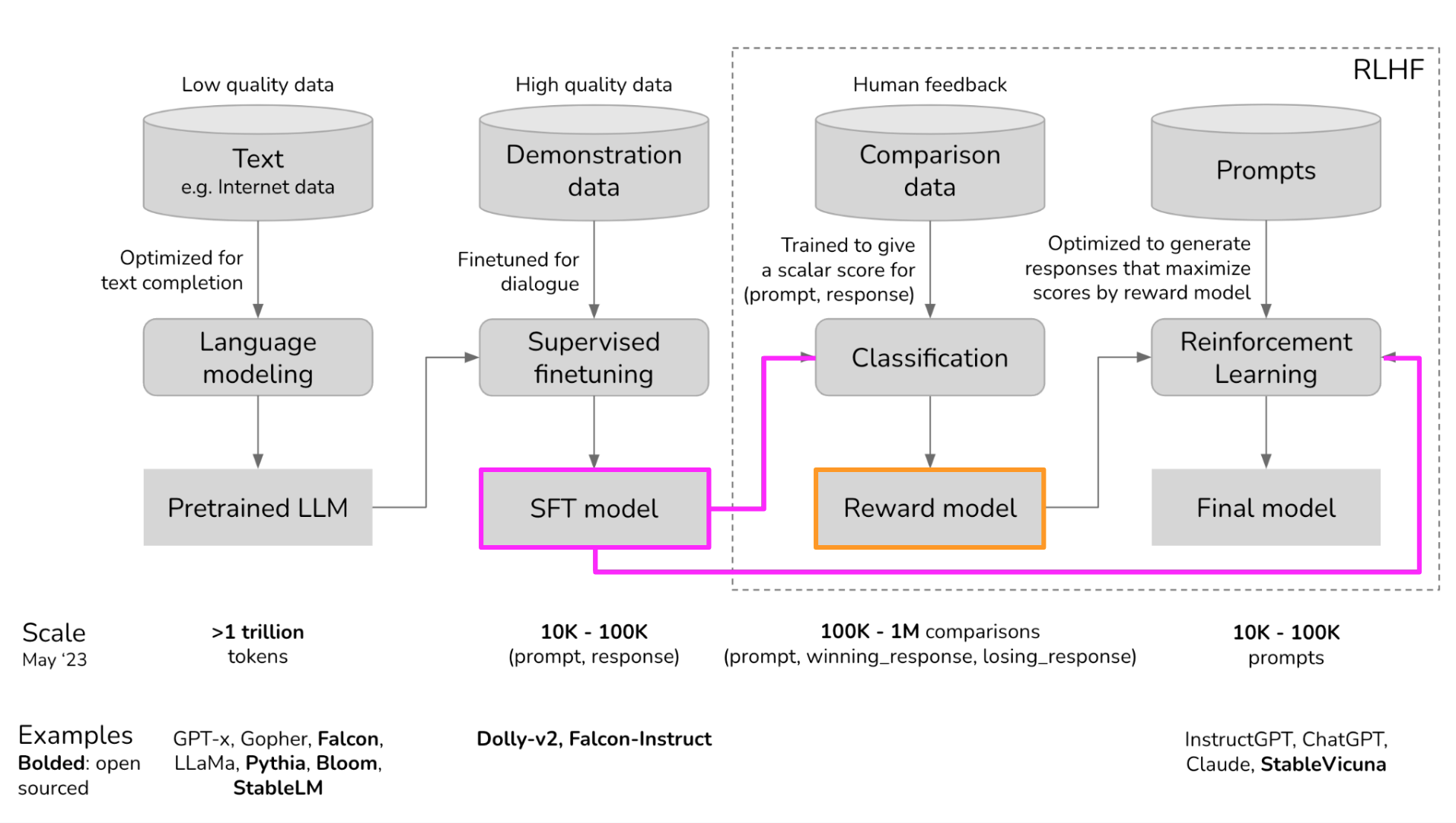

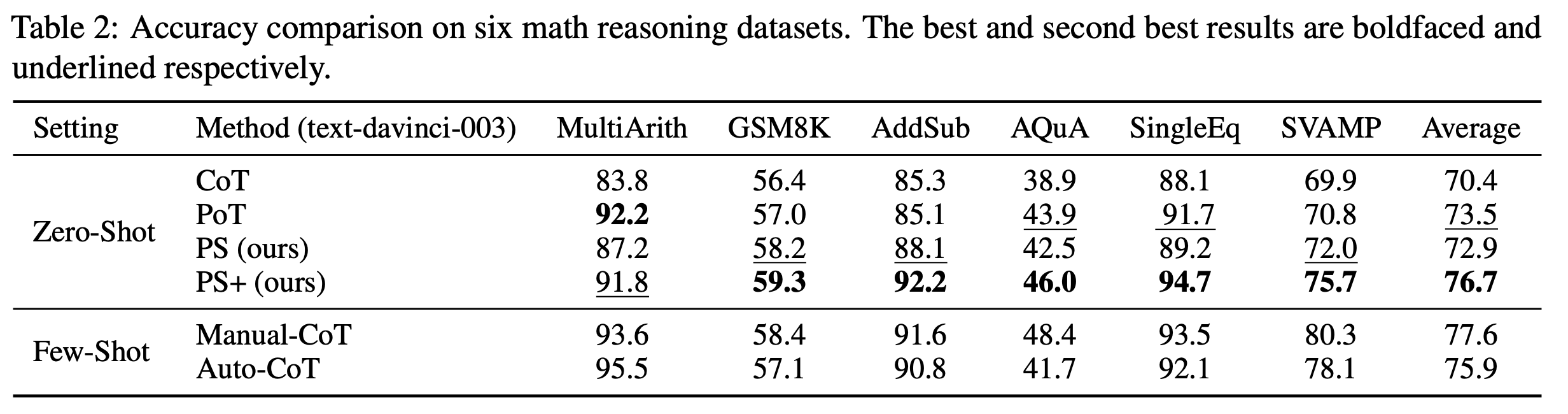

Paper Summary: RewardBench

paper summary

deep learning

LLM

Recap: HMS HBAC Kaggle Competition

fastai

kaggle competition

deep learning

Recap: My First Live Kaggle Competition

fastai

kaggle competition

machine learning

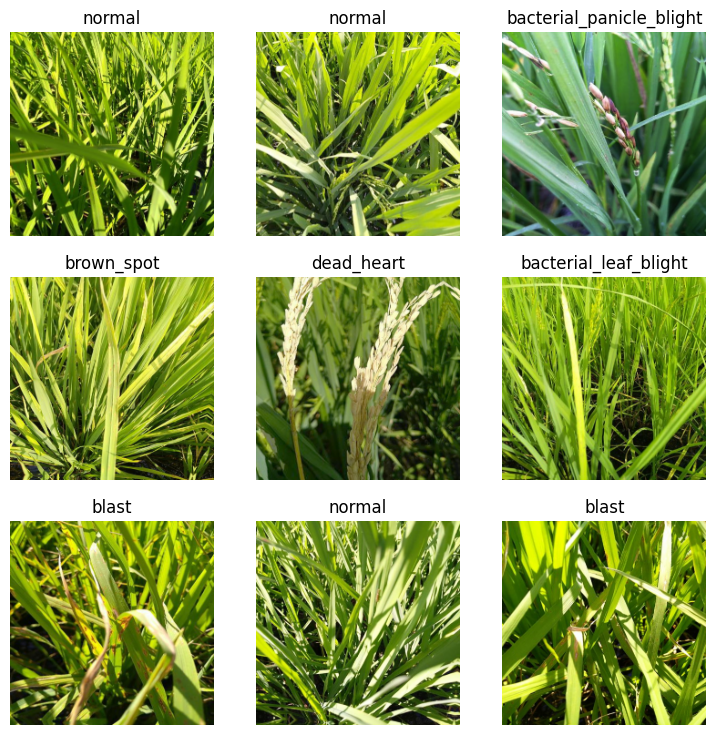

Paddy Doctor Kaggle Competition - Part 8

deep learning

fastai

kaggle competition

paddy doctor

python

Paddy Doctor Kaggle Competition - Part 7

deep learning

fastai

kaggle competition

paddy doctor

python

Paddy Doctor Kaggle Competition - Part 6

deep learning

fastai

kaggle competition

paddy doctor

python

Paddy Doctor Kaggle Competition - Part 5

deep learning

fastai

kaggle competition

paddy doctor

python

Paddy Doctor Kaggle Competition - Part 4

deep learning

fastai

kaggle competition

paddy doctor

python

Paddy Doctor Kaggle Competition - Part 3

deep learning

fastai

kaggle competition

paddy doctor

python

Paddy Doctor Kaggle Competition - Part 2

deep learning

fastai

kaggle competition

paddy doctor

python

Paddy Doctor Kaggle Competition - Part 1

deep learning

fastai

kaggle competition

paddy doctor

python

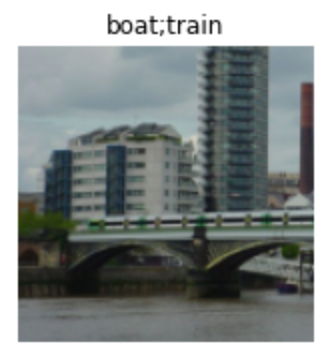

fast.ai Chapter 7: Test Time Augmentation

deep learning

python
