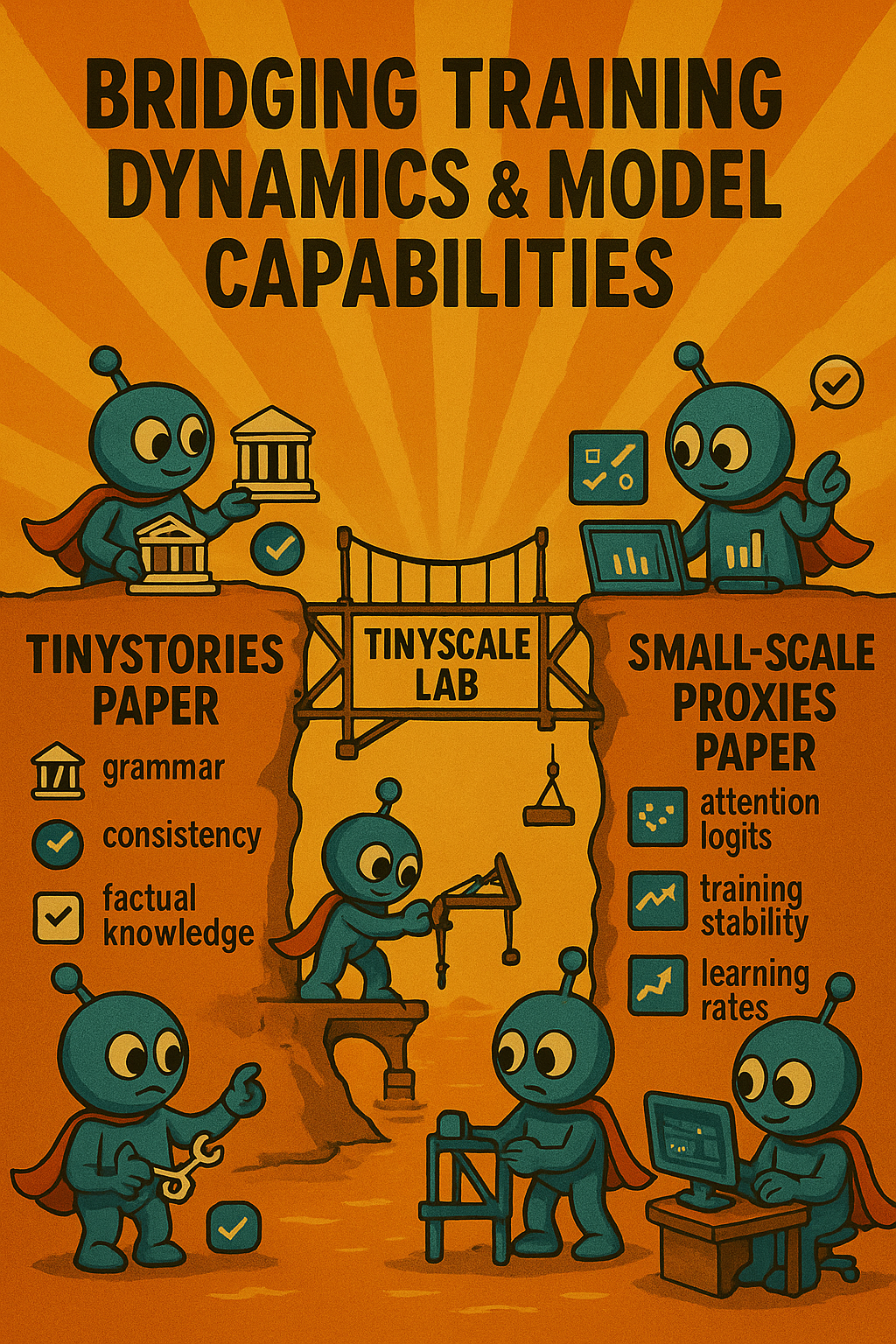

TinyScaleLab: Bridging Training Dynamics and Model Capabilities

Introduction

I’m excited to announce the kickoff of TinyScale Lab, a research project exploring the connection between training dynamics and model capabilities. This research is motivated by two papers that I’ve studied in detail: “TinyStories: How Small Can Language Models Be and Still Speak Coherent English?” by Ronen Eldan and Yuanzhi Li, and “Small-scale proxies for Large-scale Transformer Training Instabilities” by Wortsman et al.

Most LLM training research requires computational resources that are financially out of reach for individual researchers or small teams. At the same time, recent work has shown that tiny models can exhibit emergent capabilities (as demonstrated in the TinyStories paper) and can reproduce training dynamics seen at large scale (as shown in the Small-scale proxies paper).

While I don’t claim to be creating a definitive blueprint, I believe this approach, using tiny models as proxies to study phenomena relevant to models of all sizes, is an underexplored path that could benefit other resource-constrained researchers.

I think this is how most of the world’s potential researchers would need to work. Making ML research accessible in resource-constrained environments isn’t a minor concern; it’s essential for the field’s diversity and progress.

Research Hypotheses

I’ve developed four main hypotheses that will guide my research:

- H1: Training stability directly affects specific model capabilities in predictable ways.

- H2: Different model capabilities (like grammar or consistency) respond differently to training adjustments.

- H3: Early training signals can predict which capabilities a model will or won’t develop before training is complete.

- H4: Techniques that stabilize training will have varying effects on different types of model capabilities.

I’ve kept these hypotheses at a high level because I don’t yet know what I’m going to learn. Based on the TinyStories and Small-scale proxies papers, though, I expect to find relationships among these four elements.

I want to bridge the TinyStories paper’s analysis of emergent capabilities (grammar, consistency, factual knowledge, reasoning, etc.) with the Small-scale proxies paper’s analysis of training dynamics (attention logits, training instabilities, learning rates, etc.).

Experimental Design

For my experimental design, I’ve decided to focus on four model sizes:

- ~3M parameters

- ~20M parameters

- ~60M parameters

- ~120M parameters

This follows the TinyStories paper closely, with the addition of a 120M parameter model.

I’ll use the same learning rates as the Small-scale proxies paper, ranging from 3e-4 to 3e-1 with seven learning rates in total: {3e-4, 1e-3, 3e-3, 1e-2, 3e-2, 1e-1, 3e-1}

I’ll implement two stability techniques from the Small-scale proxies paper:

- QK layer norm (to mitigate attention logit growth)

- Z loss (to mitigate output logit divergence)
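A minimal sketch of what these two techniques compute, written in plain Python for a single query/key pair and a single vector of output logits. The function names, the `1e-4` coefficient, and the omission of the learnable layer-norm scale are assumptions made for illustration, not details taken from this project’s code.

```python
import math

def layer_norm(v, eps=1e-6):
    """Normalize a vector to zero mean and unit variance (learnable scale omitted)."""
    mean = sum(v) / len(v)
    var = sum((x - mean) ** 2 for x in v) / len(v)
    return [(x - mean) / math.sqrt(var + eps) for x in v]

def attention_logit(q, k, use_qk_layernorm=False):
    """Scaled dot-product logit for one query/key pair.

    With QK layer norm, q and k are normalized first, so the logit
    cannot grow with the raw magnitude of q and k.
    """
    if use_qk_layernorm:
        q, k = layer_norm(q), layer_norm(k)
    return sum(a * b for a, b in zip(q, k)) / math.sqrt(len(q))

def log_sum_exp(logits):
    """Numerically stable log of the softmax normalizer Z."""
    m = max(logits)
    return m + math.log(sum(math.exp(x - m) for x in logits))

def z_loss(logits, coeff=1e-4):
    """Auxiliary penalty coeff * (log Z)^2 that pulls log Z toward zero.

    The coefficient value is an assumption for this sketch.
    """
    return coeff * log_sum_exp(logits) ** 2
```

In a real training run the z-loss term would be added to the cross-entropy loss, and the layer norm would be applied per attention head inside the model. Here the point is only to show which quantity each technique constrains.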

What will remain fixed across all training runs are the datasets, the number of training steps, and other hyperparameters like weight decay and warm-up steps.
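The varying factors above can be laid out as a run grid. The sketch below assumes that each stability technique is toggled on and off independently and that every combination is crossed with every model size and learning rate. That full crossing is an assumption, as are the dictionary keys and the `build_grid` name.

```python
from itertools import product

MODEL_SIZES = ["3M", "20M", "60M", "120M"]
LEARNING_RATES = [3e-4, 1e-3, 3e-3, 1e-2, 3e-2, 1e-1, 3e-1]

# Assumption: QK layer norm and z-loss are each switched on/off independently,
# giving four stability settings per (size, learning rate) pair.
STABILITY_SETTINGS = list(product([False, True], repeat=2))

def build_grid():
    """Return one config dict per training run in the fully crossed grid."""
    return [
        {"size": size, "lr": lr, "qk_layernorm": qk, "z_loss": z}
        for size, lr, (qk, z) in product(
            MODEL_SIZES, LEARNING_RATES, STABILITY_SETTINGS
        )
    ]

grid = build_grid()
print(len(grid), "runs in the fully crossed grid")
```

A fully crossed grid is the simplest design to analyze, but dropping some cells (for example, the largest learning rates at the largest model size) would be a reasonable way to reduce the run count.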

The training dynamics I’ll log throughout training include:

- Logits

- Gradients

- Parameters

- Loss

For each of these, I’ll capture norms, means, maximum values, and RMS values.
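As a rough illustration of these four summaries, the sketch below computes them for a flat list of values, such as the flattened entries of one gradient tensor. The `summarize` name and the dictionary keys are hypothetical. “Maximum” is taken here as the largest signed value; the largest absolute value would be an equally plausible reading.

```python
import math

def summarize(values):
    """Return L2 norm, mean, maximum, and RMS for a non-empty list of floats."""
    n = len(values)
    sum_sq = sum(v * v for v in values)
    return {
        "norm": math.sqrt(sum_sq),      # L2 norm
        "mean": sum(values) / n,
        "max": max(values),             # largest signed value (an assumption)
        "rms": math.sqrt(sum_sq / n),   # root mean square
    }

stats = summarize([3.0, -4.0])
print(stats)
```

The norm and RMS differ only by a factor of the square root of the number of elements, so RMS is the easier one to compare across tensors of different sizes.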

The capabilities I want to evaluate are split into three categories:

- Foundational language: Grammar and context-tracking (consistency)

- Emergent capabilities: Factual knowledge, reasoning, and creativity

- Story-related: Plot

The relationship between these training dynamics and capabilities is what I want to explore.

Success Criteria

My success criteria are simple but not easy: establishing clear connections between training dynamics and tiny model capabilities. This work is exploratory, and I’m open to discovering that the relationships might be more complex or different than initially hypothesized.

Risk Assessment

I’ve identified several risks that could impact this project:

- Lack of connection between training dynamics and tiny model capabilities

- Technical challenges in monitoring complex training dynamics

- Sub-optimal parameter usage

- Compute and inference costs ballooning beyond budget

Risk Mitigation

To mitigate these risks, I plan to:

- Shorten the iteration loop

- Ensure evaluations are robust from the start

- Start at the tiniest scale and progressively increase model size

- Implement early stopping to avoid wasting compute

I learned from the fast.ai course and community to shorten the iteration loop and make sure evals are robust from the start. That way, feedback on how the model is performing arrives quickly and gives a clear, reliable signal.
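One way the early-stopping item could look in practice is a small check run on the logged loss history. This is a sketch under stated assumptions: the `should_stop` name, the `blowup_factor` and `patience` thresholds, and the three stopping rules are all hypothetical, and the loss is assumed to be positive (as cross-entropy is).

```python
import math

def should_stop(loss_history, blowup_factor=3.0, patience=200):
    """Decide whether to halt a run based on its loss history.

    Stops when the latest loss is non-finite, when it exceeds
    `blowup_factor` times the best loss so far, or when no new best
    loss has been seen for `patience` steps.
    """
    if not loss_history:
        return False
    latest = loss_history[-1]
    if not math.isfinite(latest):
        return True  # NaN or inf: the run has diverged
    best = min(loss_history)
    if latest > blowup_factor * best:
        return True  # loss has blown up relative to the best value
    steps_since_best = len(loss_history) - 1 - loss_history.index(best)
    return steps_since_best >= patience

print(should_stop([2.0, 1.5, 1.4]))           # healthy run
print(should_stop([2.0, 1.5, float("nan")]))  # diverged run
```

Because the project studies instability itself, a check like this might be better used to flag and shorten diverged runs than to discard them, since the point at which a run diverges is part of the data.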

Deliverables

My commitment is to produce:

- Comprehensive research repositories including code, trained models, and detailed datasets (training dynamics and LLM Judge scores)

- Weekly video content and blog posts

- Technical report

- Interactive visualizations

The main thing I want to emphasize is that I’ll be doing this publicly and open-source. All models, code, and findings will be freely available to enable broader participation in ML research.

Timeline and Budget

I’ve broken the project into four phases:

- Phase 1: Eval/Logging Setup, Initial Training Runs (2-3 months)

- Phase 2: Experimental Implementation (3-4 months)

- Phase 3: Analysis & Synthesis (2-3 months)

- Phase 4: Documentation & Finalization (1 month)

At minimum, I think this work will take eight months, and it could go well past a year.

For the budget, I’m estimating:

- Training: $1700 (approximately 100 training runs on 25B tokens)

- Inference: $200 (using Gemini 2.5 Flash for LLM Judge scoring)

- Total: $2000

At this point, I’m considering whether it makes sense to buy my own GPU rig. If this is going to cost $2,000, why not spend a little more or twice as much and get a GPU rig that I can own? There are a lot of variables when it comes to budget and timeline, so I’m going to take it one week at a time and make adjustments as necessary.

Closing Thoughts

To recap, TinyScale Lab aims to:

- Bridge training dynamics and model capabilities to understand what makes tiny models effective

- Create a systematic framework for understanding how training choices affect specific model capabilities

- Demonstrate that meaningful ML research is accessible with modest computational resources

- Open-source all models, code, and findings to enable broader participation in ML research

As Nick Sirianni (championship-winning coach of the Philadelphia Eagles) said, “You cannot be great without the greatness of others.” I truly stand on the shoulders of giants, especially the authors of the TinyStories and Small-scale proxies papers. Without their work and contributions in the open source space, I would not be able to even approach this kind of research.

If this work inspires someone with similar interests, or if something I built saves them time, saves them money, or gives them insight, that would be the best reward to come out of it.

I hope you’ll follow along with this journey. I’ll be keeping everything in the TinyScale Lab playlist on my YouTube channel and will tag related posts on my blog.